Hi there!

First of all, forgive me if I am posting this new topic in the wrong place. I am a newbie here and could not decide where to put it.

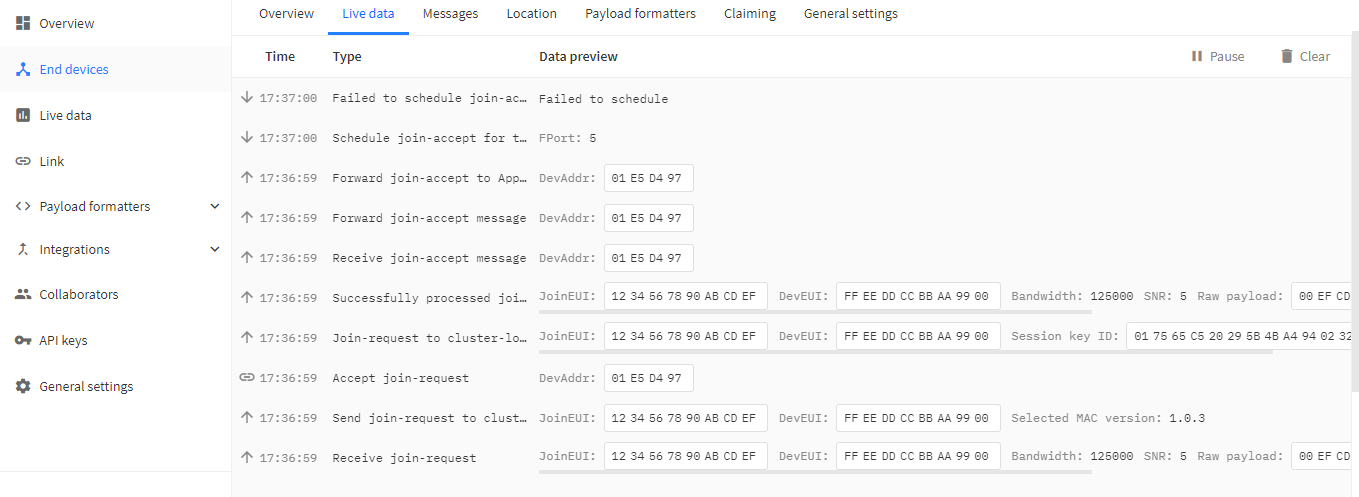

Anyway, I would like to explain my issue in as much detail as possible. It is related to the Semtech UDP Packet Forwarder communication. I am developing an application that simulates some LoRaWAN devices, and I need them to communicate with the TTN platform. I downloaded the TTN stack (with Docker) and was able to set it up properly, since everything works well for the gateway “stat” packets. However, when I simulate the “join-accept” procedure, something weird happens once I execute it three times in a row: from then on, the stack answers with an error message, as shown in the screenshot in this post.

More clues:

- The issue happens when the code runs against the Docker stack, but when I run the same code against The Things Network console (https://console.thethingsnetwork.org/) it works perfectly every time!

- Device -> MAC V1.0.3 & PHY V1.0.3 REV A.

- The test cases I have performed are the following (GW = gateway, ND = device):

  A) GW connect -> ND connect -> ND disconnect -> wait ~2 min -> ND connect: OK

  B) GW connect -> ND connect -> ND disconnect -> ND connect: !OK

  C) GW connect -> ND connect -> ND disconnect -> ND connect: !OK -> GW disconnect -> ND connect: OK
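For context, the gateway side of these sequences goes through Semtech UDP `PUSH_DATA` datagrams. Below is a minimal sketch of that framing, assuming protocol version 2 (1 version byte, 2 token bytes, 1 identifier byte, 8 gateway EUI bytes, then a JSON object). The helper names `build_push_data` and `is_push_ack` are hypothetical and only for illustration; this is not the simulator's actual code.

```python
import json
import os
from typing import Tuple

PROTOCOL_VERSION = 2   # assumed: Semtech UDP protocol version 2
PUSH_DATA = 0x00       # identifier for gateway -> server data
PUSH_ACK = 0x01        # identifier for the server's acknowledgement


def build_push_data(gateway_eui: bytes, payload: dict) -> Tuple[bytes, bytes]:
    """Frame a JSON payload as a PUSH_DATA datagram.

    Returns the datagram and the random 2-byte token, so the caller can
    match the PUSH_ACK that should come back from the server.
    """
    if len(gateway_eui) != 8:
        raise ValueError("gateway EUI must be exactly 8 bytes")
    token = os.urandom(2)
    header = bytes([PROTOCOL_VERSION]) + token + bytes([PUSH_DATA]) + gateway_eui
    return header + json.dumps(payload).encode("ascii"), token


def is_push_ack(datagram: bytes, token: bytes) -> bool:
    """Check that a received datagram is the PUSH_ACK for the given token."""
    return (
        len(datagram) >= 4
        and datagram[0] == PROTOCOL_VERSION
        and datagram[1:3] == token
        and datagram[3] == PUSH_ACK
    )


# Example "stat" payload; the EUI and counter values are placeholders.
example_eui = bytes.fromhex("0102030405060708")
example_stat = {"stat": {"time": "2014-01-12 08:59:28 GMT", "rxnb": 0, "rxok": 0,
                         "rxfw": 0, "ackr": 100.0, "dwnb": 0, "txnb": 0}}
example_packet, example_token = build_push_data(example_eui, example_stat)
```

The resulting bytes would be sent with a plain UDP socket to the stack's packet forwarder port (commonly 1700, but that depends on the Docker configuration). A join-request would travel in the same kind of datagram, with an `rxpk` array in place of `stat`.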

Is there a configuration difference between the console and the Docker stack? Is there something I am missing? Thanks in advance, looking forward to hearing from you.

Kind regards.

Daniel.