Sounds like a potential Cluster Fsuk for users of COTS products or devices in the field where they don’t have immediate control of the firmware, and for the many users/deployers who do little more than turn a device on, or maybe register its details in the console before doing so, but who don’t know one end of a CLI from an ILC and can’t/won’t engage with such shenanigans…

Absolutely - and we can feedback that the ‘give up trying to tell the node’ could do with looking at. Or at the least, we can validate the give up count but I do have a couple of nodes with some stupid downlink counts.

But to reinforce, for those that do need to control the rate from the device without intervention from the Network Server, the ability to turn off any form of ADR is being looked at.

As I see or understand it, there are two trains of thought here.

- Turn off ADR.

- Use the ADR setting to try to optimize your node’s settings automatically, where the NS assists you via the predetermined parameters you selected.

In high-capacity microwave links we have similar assistance, called AGC: as the fade margins and link conditions change, the system adjusts the TX power. So you can run a relatively low TX power and still have the optimal RX level. As the fade increases, the TX power is increased to maintain the same optimal RX level. You can safely increase the TX power as fade increases because the interference with adjacent systems stays constant: the RF path to those systems experiences the same increase in fade.

This way you are minimizing the interference with adjacent systems (nodes).

Is ADR not achieving this, where a lower TX power and DR are maintained (if correctly set up) until you experience changes in RF conditions, i.e. a change in SNR?

In this manner you can increase the number of RF devices in the same geographical area.

Note: I have seen, though, that with this type of automatic control via some clever calculations, things can take a while to stabilize when a new RF device is introduced to the mix. You sometimes need to manually intervene in the settings on one or two devices and then everything is happy again.

Potentially. But not many people are going to turn off ADR or indeed be on ABP, very few on ABP and then turning off ADR. And if they are on ABP and go on the console and find the turn off ADR button, I’d like to think they know what they are about and/or are sensible enough to subscribe to the various support channels, which is why this thread exists after some independent testing and an extensive discussion with one of the senior TTI developers, moving to a Zoom call to dig in to the details. And then there is the monitoring, see below.

As for firmware, this may feel a little harsh, but if you don’t have a risk analysis doc for all the ways it can go wrong when it’s out in the field, plus a test plan that makes NASA using SRBs in the cold look delinquent, more fool you. Makers deploying stuff they can get to in order to fix issues is fine, I’ve certainly got plenty of them out in the field for community use, but when you are making something that is being deployed into distribution and then out into the field in the 1,000s, it’s a whole different ball game. Or, in some countries I’ve co-developed for, 5 hours’ drive away. I (well, the production manager aka The Boss aka The Wife) make devices that go into fire alarms; we’ve been achieving Six Sigma for about 15 years.

For those doing TTN Mapper projects, fixing your DR to SF7 (yeah, enjoy the dual contra-scales, way to go LoRa standards) is as simple as putting in an explicit API call to fix the DR/SF (FYI, both LoRaMAC-node & LMIC use DR) before each uplink.
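To make the ‘contra-scales’ concrete, here is a minimal Python sketch of the SF/DR relationship. It assumes the 125 kHz LoRa data rates of the EU868 and IN865 plans (DR0 = SF12 up to DR5 = SF7); other regions number things differently, and the function names are just made up for illustration.

```python
# Assumed mapping for the 125 kHz LoRa data rates of EU868 / IN865.
# The scales run in opposite directions: DR0 is the slowest (SF12)
# and DR5 is the fastest (SF7).
SF_TO_DR = {12: 0, 11: 1, 10: 2, 9: 3, 8: 4, 7: 5}
DR_TO_SF = {dr: sf for sf, dr in SF_TO_DR.items()}


def sf_to_dr(sf: int) -> int:
    """Return the data rate index for a spreading factor (hypothetical helper)."""
    if sf not in SF_TO_DR:
        raise ValueError(f"unsupported spreading factor: SF{sf}")
    return SF_TO_DR[sf]


def dr_to_sf(dr: int) -> int:
    """Return the spreading factor for a data rate index (hypothetical helper)."""
    if dr not in DR_TO_SF:
        raise ValueError(f"unsupported data rate index: DR{dr}")
    return DR_TO_SF[dr]


if __name__ == "__main__":
    for sf in sorted(SF_TO_DR):
        print(f"SF{sf} -> DR{sf_to_dr(sf)}")
```

So a mapper that wants SF7 asks its stack for DR5, and the DR0 default mentioned in this thread is SF12.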

If you are deploying devices professionally, then your monitoring systems should highlight that there has been a change in energy usage / transmission parameters. And your change log should correlate with these alerts. And any uplink over SF10 should light up the dashboard, as SF11/12 are prohibited for long term use.
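As a rough idea of the ‘light up the dashboard’ check, something like the Python sketch below could sit in whatever receives your uplinks. It assumes the uplink arrives as a dict shaped like the TTS v3 JSON, with the spreading factor under `uplink_message.settings.data_rate.lora.spreading_factor`; check that path against your own integration’s payloads. The function names and threshold constant are hypothetical.

```python
from typing import Optional

# Uplinks above this spreading factor should raise an alert (SF11/SF12).
SF_ALERT_THRESHOLD = 10


def spreading_factor(uplink: dict) -> Optional[int]:
    """Extract the spreading factor, or None if the field is missing.

    The nesting used here is an assumption about the uplink JSON layout.
    """
    lora = (
        uplink.get("uplink_message", {})
        .get("settings", {})
        .get("data_rate", {})
        .get("lora", {})
    )
    return lora.get("spreading_factor")


def needs_alert(uplink: dict, threshold: int = SF_ALERT_THRESHOLD) -> bool:
    """True when the uplink used a spreading factor above the threshold."""
    sf = spreading_factor(uplink)
    return sf is not None and sf > threshold


example_uplink = {
    "uplink_message": {
        "settings": {
            "data_rate": {"lora": {"bandwidth": 125000, "spreading_factor": 12}}
        }
    }
}

if __name__ == "__main__":
    print(needs_alert(example_uplink))
```

Messages without the field are treated as ‘no alert’ here; a real monitor would probably want to flag those separately.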

Sure, this isn’t ideal, but totally fixable with this information and is being worked on. We’ve had plenty of other ‘moments’ with TTS deployment, I’d call this as being a niche issue that if you’re paying attention shouldn’t be an issue.

Yes, but LoRaWAN doesn’t respond that fast as environmental conditions can affect individual uplinks so a hair trigger response isn’t appropriate and there is a penalty to sending a downlink.

Consider that there are five settings for ADR in the CLI:

adr-ack-delay-exponent

adr-ack-limit-exponent

adr-data-rate-index

adr-nb-trans

adr-tx-power-index

Although I’m not clear on which ones can be explicitly set as there is current, desired, pending and MAC-state plus MAC-settings.

But overall, a lot of control over how a device is commanded and how quickly it should be told or confirm it has set the new parameters.

One of the considerations for managing devices is being able to choose which devices a gateway can send a downlink to, as having a pile of devices where the RF environment has changed doesn’t mean it’s appropriate to hammer the area with downlinks to alter DRs to suit what may be a temporary situation. That would be the case where the gateway is ‘compromised’; if it’s an individual device, that would be different. God knows how the Network Server could determine in semi-realtime which devices have had a food truck parked next to them out of a potential 5,000 other devices that a gateway may be serving.

I help teach GCSE & A Level Computer Science, this one would be way way outside the scope of a degree level algorithm. Kudos to the TTI team for managing to wrap their heads around these non-trivial algorithm conundrums.

I have a number of commercial devices which I know are out in the field in thousands, or maybe even hundreds of thousands, with firmware which is at least questionable with regard to how robust the stack is.

Maybe even worse, I’ve been working on porting lora-mac to a new hardware platform which is really close to the reference platform, and while working on it I found really interesting issues. So even the ‘gold reference’ is not up to your standard.

I don’t have time or funds or, indeed, feel the need to rewrite the stacks. And I too have a laundry list of ‘features’ that I’d like to have resolved if I can figure out how to validate / repeat the issues.

But I can put in mitigation for any issues with bounded loops, hardware checks and software as well as the hardware watchdog. And if I’m going to use an unusual configuration, ensure there is a failsafe interaction available to command on/off & parameters.

But overall, the IoT sensing projects aren’t connected to any form of fire, safety or security systems, so there is less benefit in striving for zero defects.

- Disable ADR.

- Node TX in SF7.

- CLI used to fix to SF7 with following command:

ttn-lw-cli end-devices set xxxxxxxxxx xxxxxxxxxxx --mac-state.desired-parameters.adr-data-rate-index DATA_RATE_5

The server still sends the DR0 change in opts field. Same steps tried with ADR disabled as well.

The current SNR is -6.8 and RSSI is -118 (approx. 10 km). So I can’t escape the ADR request from the server when ADR is enabled. (I tried increasing the ADR margin as well.)

When ADR is disabled, the CLI fix is not working. The CLI used is v3.18.0.

The gateway is not able to reach the node (it can’t downlink at this distance).

Is there anything I am doing wrong?

That’s not sensible. Reciprocity means that a node which can uplink should be able to be downlinked to, bar implementation errors.

It is able to up-link and downlink in better SNR. But yes I need to check, but the current problem of SF12 is of higher priority.

and

Appear to contradict each other - you have to turn off ADR on the console and then tell the NS, via the CLI, what DR you want the device to use - if the device uses that DR, it won’t get commanded, if it doesn’t, it will.

But I’m impressed you get 10 km on DR5, so maybe the device is doing something different.

Having spoken at length to a senior TTI engineer about this issue I still took a few minutes to check it was as expected.

But there is still the join issue that can’t be circumvented.

Can you check your details so we can understand what sequence of tests you tried?

I did exactly the same thing.

Typo! Sorry!

Since things didn’t work as expected after setting it through the CLI with the node transmitting in SF7, I just tried the same step with ADR enabled. I will edit the original. Don’t mind that sentence, as I had only just tried it.

Currently.

And currently transmitting in SF7

Around these parts we don’t edit after people have commented as it makes a nonsense of the subsequent posts. I’ve reversed the edit.

So, you’ve disabled ADR on the console, set the DR to 5 using the CLI and the device is transmitting at DR5. Which seems to be working as advertised.

It’s working, but I get an SF12 request from the NS.

I will keep that in mind for the pointed out comments.

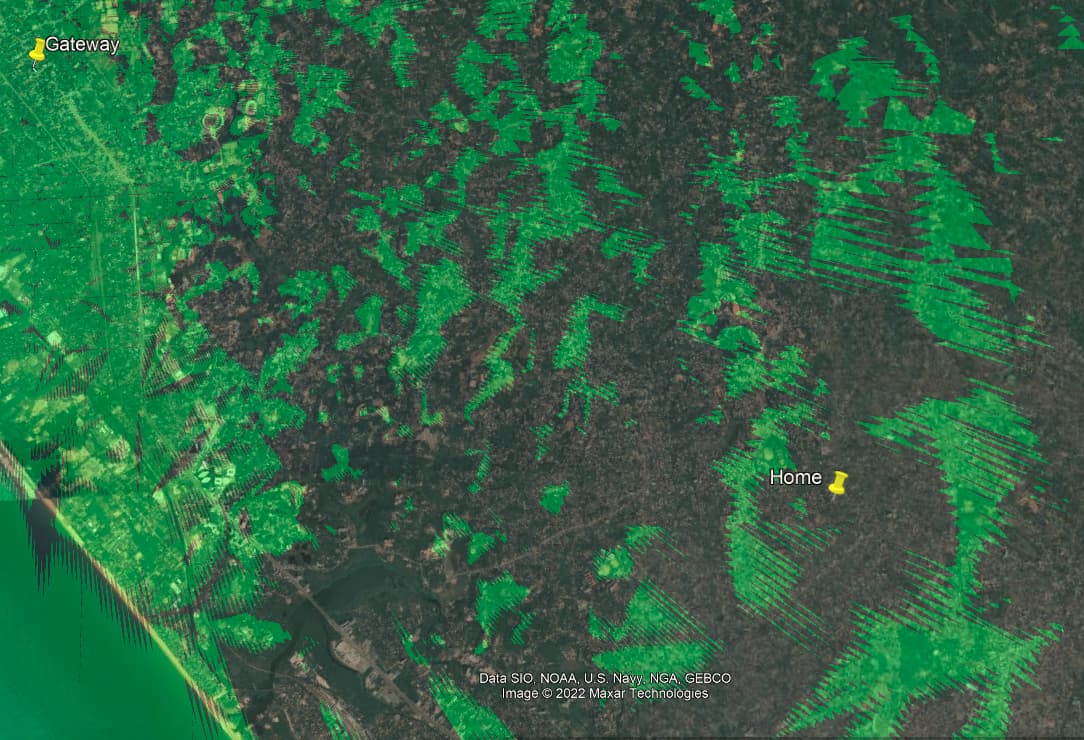

Home is 4 floors high, so maybe that’s why I am getting it. Other coverage simulations also show a similar range. Not relevant to the discussion, just some context for the statement. The node at home is transmitting at 20 dBm with a 2 dBi antenna.


Where in the world are you to be allowed to use 20 dBm ERP?

From one of his other, earlier posts on another thread:-

"The legal limits of transmission are 4watt EIRP (1 watt maximum) in 865-867. Location is India (South).

Both are transmitting only at 20dBm."

I’m going to stop now, because you said that it was transmitting at SF7 which appeared to say that it was working, but now you add this detail after the fact. I’ll concentrate on topics I can keep up with.

@sniper4uonline has clearly explained that they’ve told TTN the node should be using SF7, it is using SF7, but it’s being told by TTN to use SF12.

That’s obviously a problem.

Yes, indeed, well, not that obvious as the feedback failed to mention this pertinent detail until I sought clarification. I’ve detailed the problem as I currently understand it in the original post for this topic, as acknowledged by a TTI engineer, a problem I’ve spent time independently verifying and discussing with TTI as to current work-arounds and future amelioration.

When I created a test ABP device with ADR turned off on the console I saw it going to SF12, DR0, as per the database default (of zero) and as reported. I could get the device to stay on the DR I wanted without firmware changes when I updated the settings as detailed above. In some respects the current situation is useful as it effectively allows someone to do ADR to their own algorithm by looking at the signal strength and adjusting the desired DR as they see fit.
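For anyone tempted by the do-your-own-ADR idea, a very rough Python sketch follows. It is not how the NS calculates anything: the per-SF SNR floors are the commonly quoted approximate LoRa demodulation limits at 125 kHz, the margin and SF cap are arbitrary defaults, the function names and the application/device IDs are hypothetical, and the only thing taken from this thread is the CLI flag itself. It just builds the command string; running it is up to you.

```python
import shlex

# Approximate demodulation-floor SNR in dB per spreading factor (125 kHz).
REQUIRED_SNR_DB = {7: -7.5, 8: -10.0, 9: -12.5, 10: -15.0, 11: -17.5, 12: -20.0}
# Assumed EU868 / IN865 mapping from spreading factor to data rate index.
SF_TO_DR = {12: 0, 11: 1, 10: 2, 9: 3, 8: 4, 7: 5}


def pick_data_rate(snr_db: float, margin_db: float = 10.0, max_sf: int = 10) -> int:
    """Pick the fastest DR whose SNR floor plus margin is met, capped at max_sf.

    If nothing fits, fall back to the DR for max_sf rather than going to
    SF11/SF12.
    """
    for sf in range(7, max_sf + 1):
        if snr_db >= REQUIRED_SNR_DB[sf] + margin_db:
            return SF_TO_DR[sf]
    return SF_TO_DR[max_sf]


def cli_command(app_id: str, dev_id: str, dr: int) -> str:
    """Build the ttn-lw-cli command that sets the desired data rate index."""
    return " ".join(
        [
            "ttn-lw-cli",
            "end-devices",
            "set",
            shlex.quote(app_id),
            shlex.quote(dev_id),
            "--mac-state.desired-parameters.adr-data-rate-index",
            f"DATA_RATE_{dr}",
        ]
    )


if __name__ == "__main__":
    dr = pick_data_rate(snr_db=-6.8, margin_db=0.0)
    print(cli_command("my-app", "my-device", dr))
```

With no margin, an SNR of -6.8 dB only just clears the SF7 floor, which gives a feel for how marginal a long SF7 link is; with the default 10 dB margin the same SNR falls through to the SF10 cap.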

But like the original threads that spawned the topic, the resulting feedback is conflicting and confusing. If the OP wants to use ABP in the manner he desires, he’s more than welcome to take it up on Slack with TTI directly.

I’ve tried to document the situation for others so they can take appropriate action but I can neither mandate changes to other people’s firmware nor mandate updates to TTS. And the solution worked for me when I tried it but I do not currently have the capacity to take fragmented reports & piece them together, so the onus is on anyone who needs help to provide a detailed & structured report and even then, I may have my hands full with other things.

The server is responding as mentioned by @descartes. I tried changing the SF to 7, 8 etc. and it is working. The server is no longer asking for SF12 (DR0). (Using the CLI as mentioned by @descartes.)

This would have been some setup mistake on my side. On top of that mistake, my node was not able to receive messages from the server. So the server kept asking for the node’s status, this time along with the SF7/DR5 request, as there was no reply to the status query. This is the normal procedure when an ABP node joins.

Since the server was not getting the status message back, it kept asking for it. (I tried for at least 2000 messages; it doesn’t stop.)

So in short:

- DR0 request from server was solved using the solution provided by @descartes

- I kept receiving status request from server until the node replied with status in mac field.

The details below are not relevant, just added info.

Somehow the node received one of the status request from gateway (few minutes back).

Request from server:

opts field: 060350FF0001

Meaning:

Server is requesting status of the end device

Data rate: LoRa: SF7 / 125 kHz

Power: Max EIRP

Usable channels with LSB as Channel 0: ff00

Number of transmissions for each uplink message: 1

Channel mask control (ChMaskCntl): 0

Reply to server:

opts field: 06FF370307

Meaning:

The device was not able to measure the battery level

The margin (SNR in dB): -9

Power Accepted by the end node

Data rate accepted by node

Channel mask interpreted and all channel states set
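For anyone wanting to check the two opts fields above by hand, here is a small Python sketch of a decoder. It only knows the two commands seen in this exchange (CID 0x06 device status and CID 0x03 LinkADR) and follows the LoRaWAN 1.0.x MAC command layouts as I understand them; the function names are made up, and anything else in the field raises an error.

```python
def decode_downlink_opts(hex_str: str) -> list:
    """Decode DevStatusReq / LinkADRReq commands from a downlink opts field."""
    data = bytes.fromhex(hex_str)
    out, i = [], 0
    while i < len(data):
        cid = data[i]
        if cid == 0x06:  # DevStatusReq carries no payload
            out.append({"command": "DevStatusReq"})
            i += 1
        elif cid == 0x03:  # LinkADRReq: DataRate_TXPower, ChMask (LSB first), Redundancy
            dr_txpow, mask_lo, mask_hi, redundancy = data[i + 1 : i + 5]
            out.append(
                {
                    "command": "LinkADRReq",
                    "data_rate_index": dr_txpow >> 4,
                    "tx_power_index": dr_txpow & 0x0F,
                    "channel_mask": mask_lo | (mask_hi << 8),
                    "ch_mask_cntl": (redundancy >> 4) & 0x07,
                    "nb_trans": redundancy & 0x0F,
                }
            )
            i += 5
        else:
            raise ValueError(f"unhandled CID 0x{cid:02X}")
    return out


def decode_uplink_opts(hex_str: str) -> list:
    """Decode DevStatusAns / LinkADRAns commands from an uplink opts field."""
    data = bytes.fromhex(hex_str)
    out, i = [], 0
    while i < len(data):
        cid = data[i]
        if cid == 0x06:  # DevStatusAns: battery byte, then 6-bit signed margin
            battery, raw = data[i + 1 : i + 3]
            margin = raw & 0x3F
            if margin >= 32:  # sign-extend the 6-bit value
                margin -= 64
            out.append({"command": "DevStatusAns", "battery": battery, "margin_db": margin})
            i += 3
        elif cid == 0x03:  # LinkADRAns: one status byte with three ACK bits
            status = data[i + 1]
            out.append(
                {
                    "command": "LinkADRAns",
                    "channel_mask_ack": bool(status & 0x01),
                    "data_rate_ack": bool(status & 0x02),
                    "power_ack": bool(status & 0x04),
                }
            )
            i += 2
        else:
            raise ValueError(f"unhandled CID 0x{cid:02X}")
    return out


if __name__ == "__main__":
    print(decode_downlink_opts("060350FF0001"))
    print(decode_uplink_opts("06FF370307"))
```

A battery value of 255 means the device could not measure it, and the channel mask bytes FF 00 arrive LSB first, so the mask value is 0x00FF, i.e. channels 0 to 7 enabled.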

This solved everything and I could put up everything together as there was no further request from server.

So there could be one more improvement for this scenario. If the gateway can’t reach the node and only the node’s uplinks get through, which is OK for a mapper node, an option to disable the status request would be great, to avoid unnecessary TX from the gateway. (I tried setting the status fields to 0 in the console to disable it; that didn’t work, possibly because it’s the usual procedure for an ABP device: at least one status message back to the gateway.)