Since moving from primarily Microchip (RN2903) nodes on AU915 to a variety of nodes (e.g. Seeeduino’s RisingHF and mDot’s SX1272) on AS923, I’ve never had reliable joins on TTN. After nearly a year of accepting it, I’ve gone digging for a reason. Here’s what I’ve found:

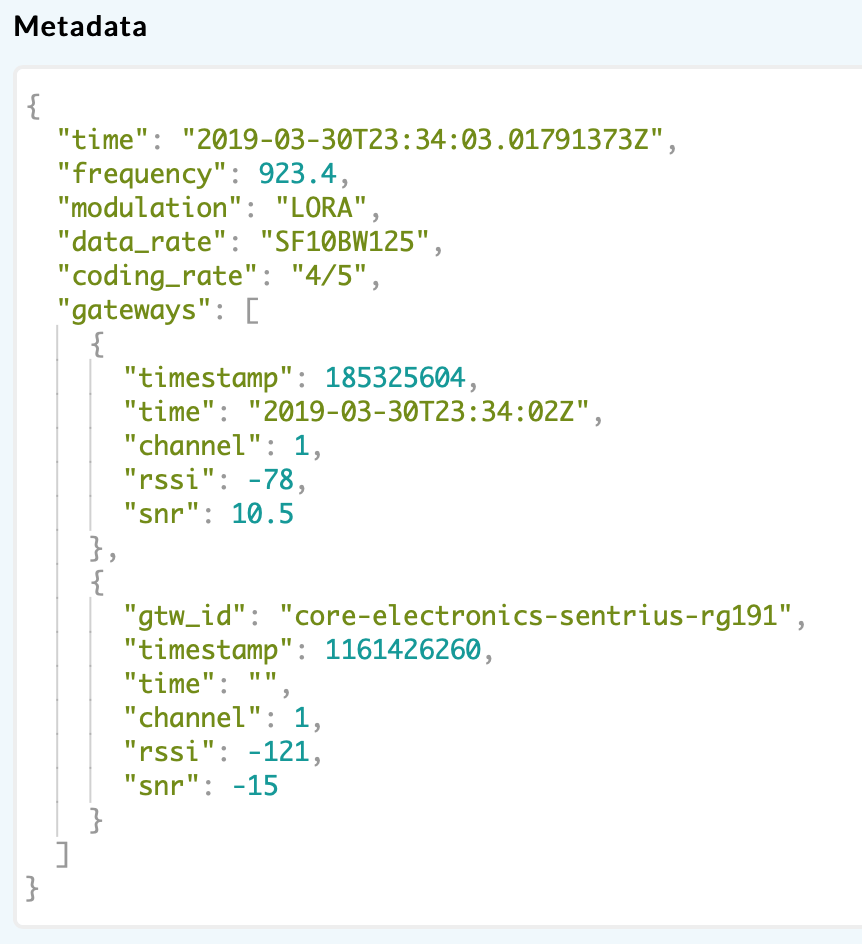

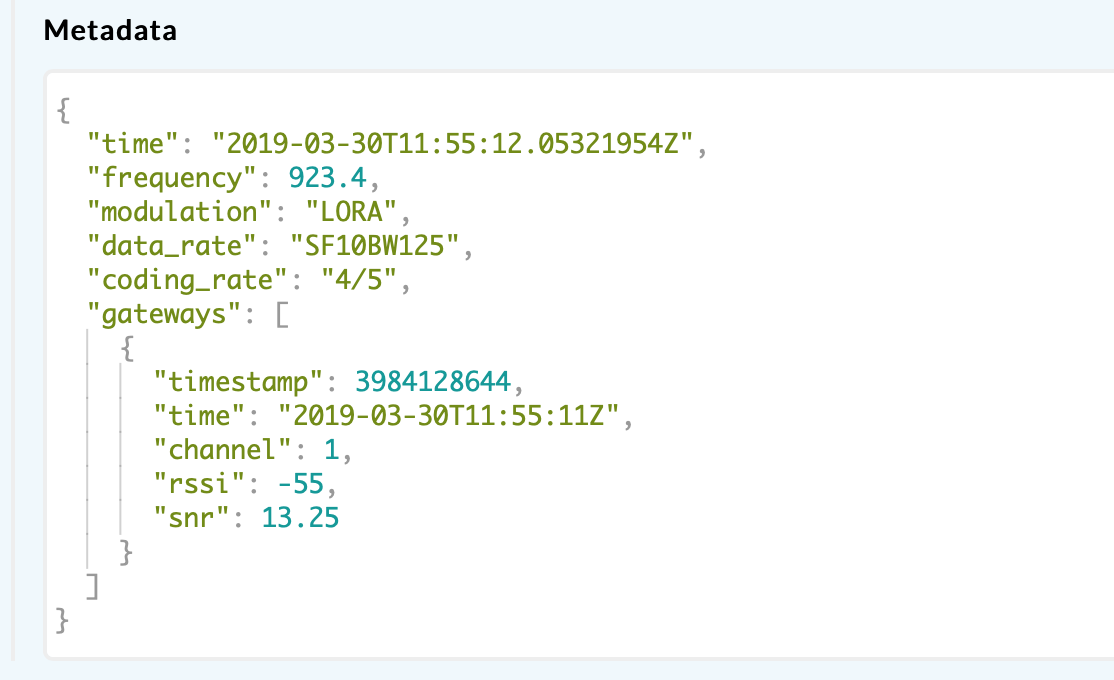

The join-request looks fine, and reaches the Application Server in the console:

The only strange thing I see is that the gateway’s ID usually doesn’t appear.

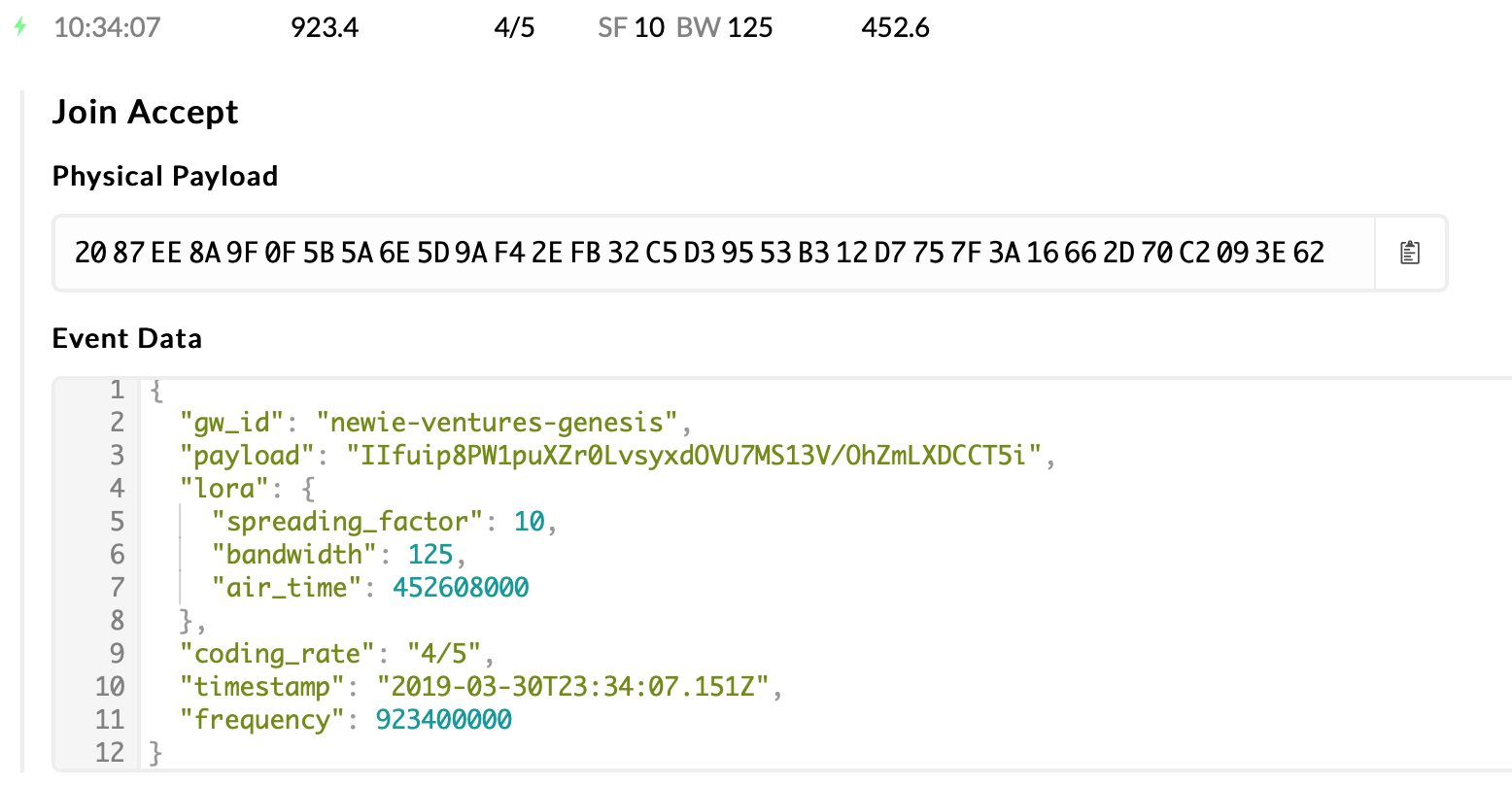

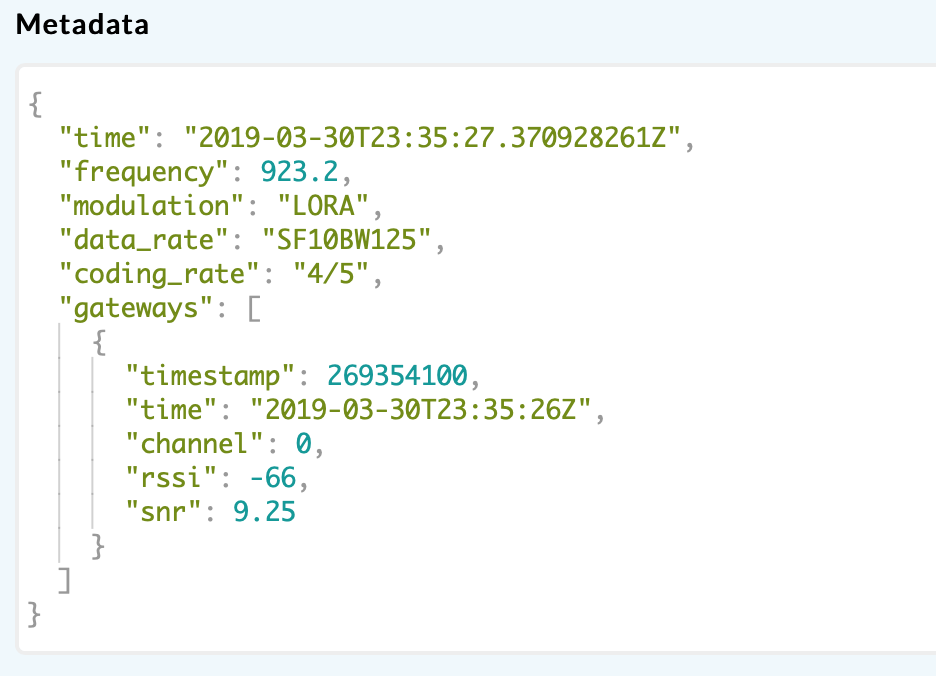

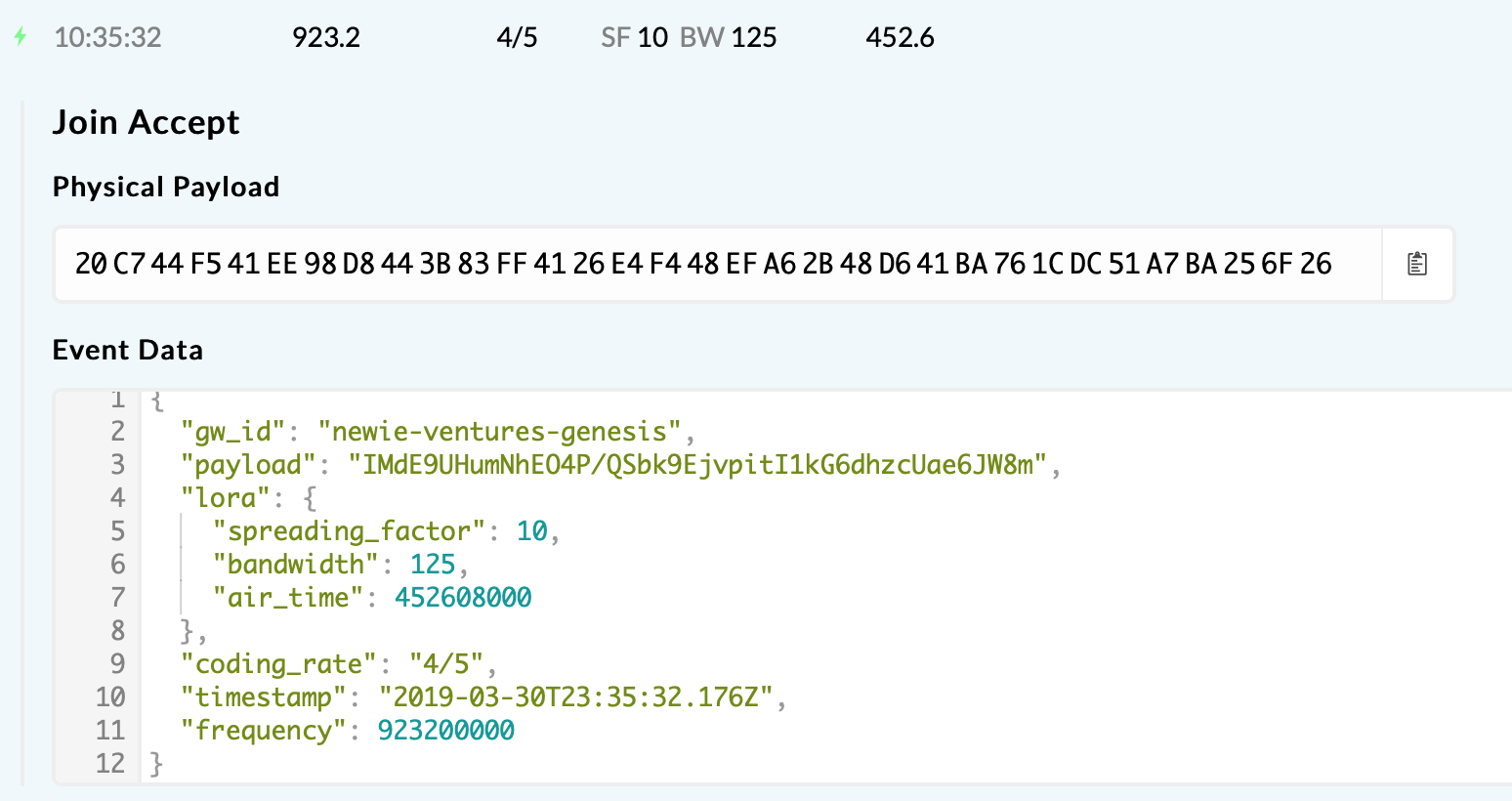

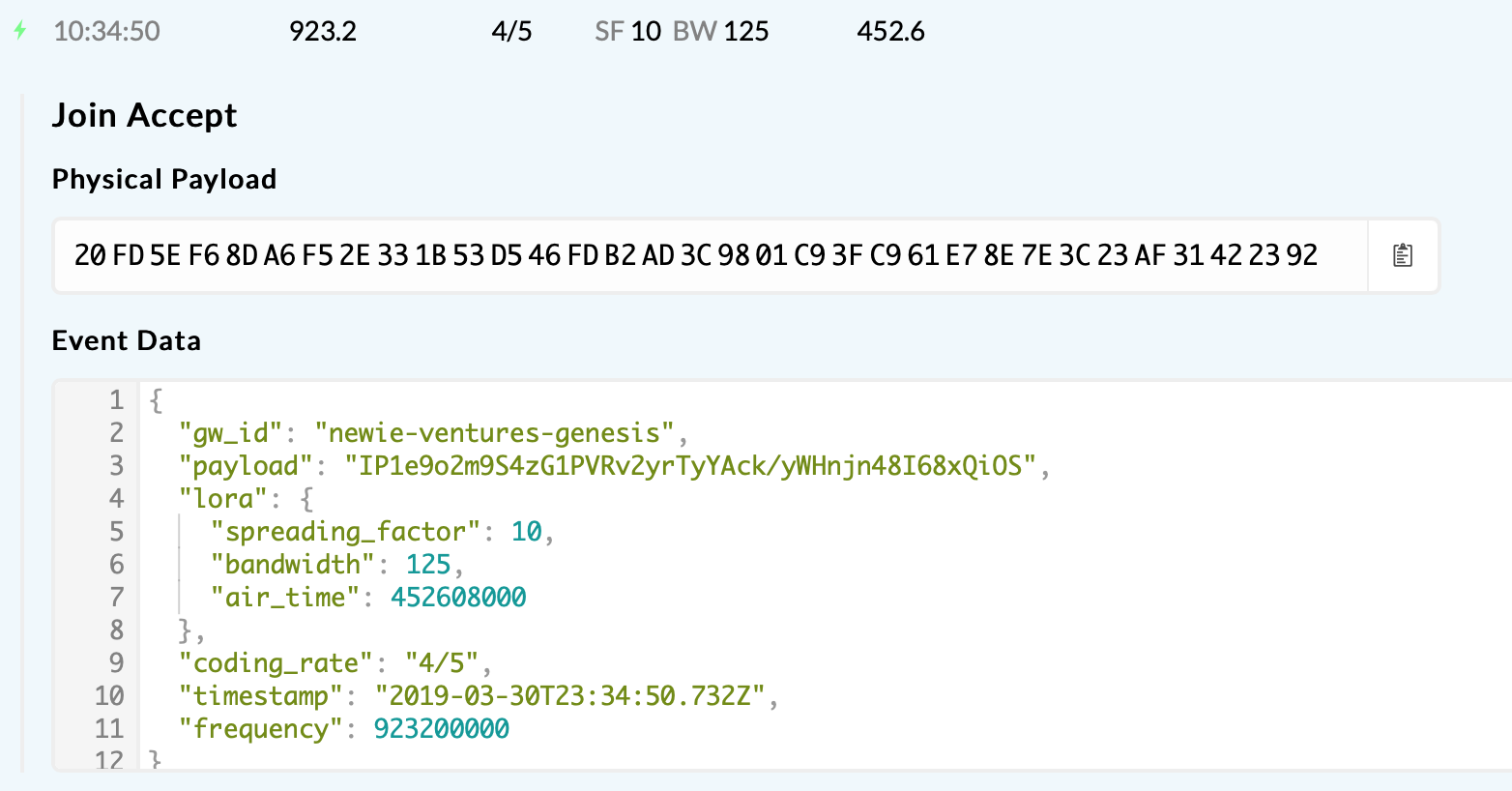

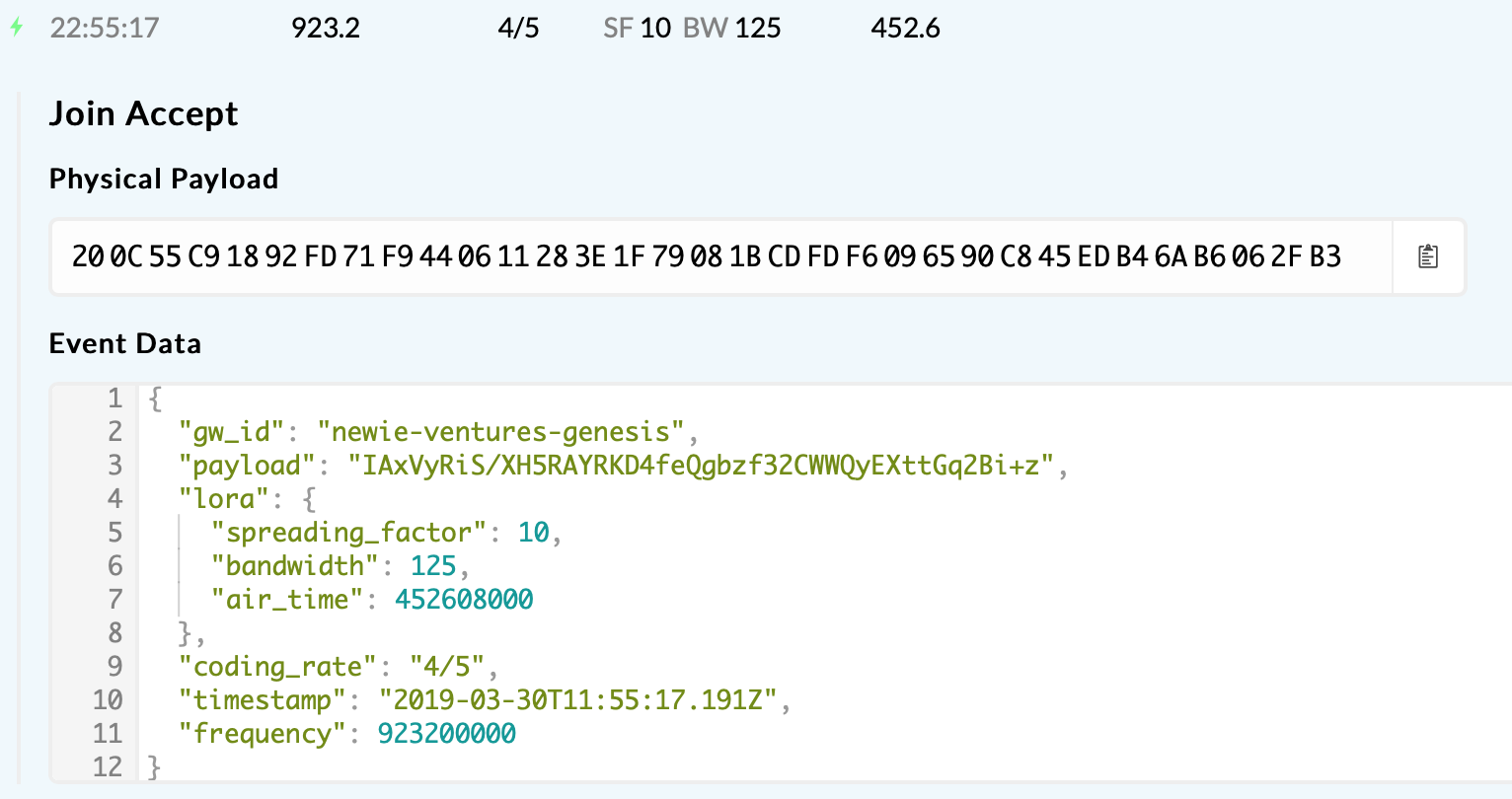

But regardless, the join-accept also looks fine. Here’s how it looks at the gateway console:

The gateway itself is a MultiTech Conduit running kersing’s mp-packet-forwarder and it looks to handle the join-accept fine:

22:55:12 INFO: [up] TTN lora packet send to server "thethings.meshed.com.au"

22:55:17 INFO: [down] TTN received downlink with payload

22:55:17 INFO: [TTN] downlink 33 bytes

INFO: Enabling TX notch filter

66.d9.99.a.5f.13.b5.9.0.9a.21.10.0.8.0.0.20.8c.5c.5c.a0.df.e5.50.72.e3.d2.cd.9f.95.6a.14.95.a1.bb.7f.a.9e.4a.bf.d0.3f.48.bd.fb.a3.51.cb.ed.end

INFO: tx_start_delay=1495 (1495.500000) - (1497, bw_delay=1.500000, notch_delay=0.000000)

And finally, the mDot itself looks to be behaving correctly:

|Timestamp|Message|
|---|---|
|2019-03-30 22:55:07|[INFO] =====================|
|2019-03-30 22:55:07|[INFO] general configuration|
|2019-03-30 22:55:07|[INFO] =====================|
|2019-03-30 22:55:07|[INFO] version ------------------ 3.0.0-mbed144|
|2019-03-30 22:55:08|[INFO] device ID/EUI ------------ 008000000001361f|
|2019-03-30 22:55:08|[INFO] default channel plan ----- US915|
|2019-03-30 22:55:08|[INFO] current channel plan ----- AS923|
|2019-03-30 22:55:08|[INFO] public network ----------- on|
|2019-03-30 22:55:08|[INFO] =========================|
|2019-03-30 22:55:08|[INFO] credentials configuration|
|2019-03-30 22:55:08|[INFO] =========================|
|2019-03-30 22:55:08|[INFO] device class ------------- A|
|2019-03-30 22:55:08|[INFO] network join mode -------- AUTO_OTA|
|2019-03-30 22:55:08|[INFO] network name -------------|
|2019-03-30 22:55:08|[INFO] network phrase -----------|
|2019-03-30 22:55:08|[INFO] network EUI -------------- 70b3d57ed000c0b7|
|2019-03-30 22:55:08|[INFO] network KEY -------------- XXX|
|2019-03-30 22:55:08|[INFO] ========================|
|2019-03-30 22:55:08|[INFO] communication parameters|
|2019-03-30 22:55:08|[INFO] ========================|
|2019-03-30 22:55:08|[INFO] acks --------------------- off, 0 attempts|
|2019-03-30 22:55:08|[INFO] TX datarate -------------- DR0|
|2019-03-30 22:55:08|[INFO] TX power ----------------- 20 dBm|
|2019-03-30 22:55:08|[INFO] antenna gain ------------- 3 dBm|
|2019-03-30 22:55:08|[INFO] LBT ---------------------- off|
|2019-03-30 22:55:08|[INFO] ==================|
|2019-03-30 22:55:08|[INFO] receive parameters|
|2019-03-30 22:55:08|[INFO] ==================|
|2019-03-30 22:55:08|[INFO] Join delay --------------- 5 s|
|2019-03-30 22:55:08|[INFO] Join rx1 dr offset ------- 0|
|2019-03-30 22:55:09|[INFO] Join rx2 dr -------------- 2|
|2019-03-30 22:55:09|[INFO] Join rx2 freq ------------ 923200000 Hz|
|2019-03-30 22:55:09|[INFO] Receive delay ------------ 1 s|
|2019-03-30 22:55:09|[INFO] ==================|
|2019-03-30 22:55:10|[INFO] attempt 1 to join network|
|2019-03-30 22:55:10|[INFO] Send join request RxDelay: 5 Rx1Offset: 0 Rx2Freq: 923200000 Rx2Dr: 2|
|2019-03-30 22:55:10|[INFO] Configure radio for TX|
|2019-03-30 22:55:10|[DEBUG] Session pwr: 20 ant: 3 max: 20|
|2019-03-30 22:55:10|[DEBUG] Radio Power index: 16 output: 17 total: 20|
|2019-03-30 22:55:10|[DEBUG] TX PWR: 16 DR: 2 SF: 10 BW: 0 CR: 1 PL: 8 CRC: 1 IQ: 0|
|2019-03-30 22:55:11|[INFO] Configure radio for TX|
|2019-03-30 22:55:11|[DEBUG] Session pwr: 20 ant: 3 max: 20|
|2019-03-30 22:55:11|[DEBUG] Radio Power index: 16 output: 17 total: 20|
|2019-03-30 22:55:11|[DEBUG] TX PWR: 16 DR: 2 SF: 10 BW: 0 CR: 1 PL: 8 CRC: 1 IQ: 0|
|2019-03-30 22:55:11|[DEBUG] Using channel 1 : 923400000|
|2019-03-30 22:55:11|[INFO] Configure radio for TX|
|2019-03-30 22:55:11|[DEBUG] Session pwr: 20 ant: 3 max: 20|
|2019-03-30 22:55:11|[DEBUG] Radio Power index: 16 output: 17 total: 20|
|2019-03-30 22:55:11|[DEBUG] TX PWR: 16 DR: 2 SF: 10 BW: 0 CR: 1 PL: 8 CRC: 1 IQ: 0|
|2019-03-30 22:55:11|[INFO] Configure radio for TX|
|2019-03-30 22:55:11|[DEBUG] Session pwr: 20 ant: 3 max: 20|
|2019-03-30 22:55:11|[DEBUG] Radio Power index: 16 output: 17 total: 20|
|2019-03-30 22:55:11|[DEBUG] TX PWR: 16 DR: 2 SF: 10 BW: 0 CR: 1 PL: 8 CRC: 1 IQ: 0|
|2019-03-30 22:55:11|[DEBUG] update TOE: freq: 923400000 i:0 toa: 370 DC:0|
|2019-03-30 22:55:12|[DEBUG] mDotEvent - TxDone|
|2019-03-30 22:55:17|[INFO] Rx Window 1|
|2019-03-30 22:55:18|[INFO] Rx Window 2|
|2019-03-30 22:55:18|[ERROR] Failed to join network|

I’ve studied the logs intensely and can see that:

- The mDot appears to send a well-formed `join-request` with DR 2 (SF10BW125).
- Five seconds later the expected gateway sends a well-formed `join-accept`, also with DR 2 (SF10BW125).
- The mDot waits the required 5 seconds for the `join-accept`, but never receives it.
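As a cross-check on the radio settings, the `toa: 370` value in the mDot log can be compared with the standard LoRa time-on-air formula from the Semtech SX127x datasheets. The sketch below is only a sanity check, not anything the mDot or TTN runs: the function name and its defaults are made up for illustration, and it assumes a 23-byte join-request (the standard size), the 33-byte join-accept reported by the gateway, an 8-symbol preamble and coding rate 4/5.

```python
import math


def lora_time_on_air_ms(payload_len, sf, bw_hz=125_000, cr=1,
                        preamble_syms=8, explicit_header=True, crc=True):
    """Time on air in milliseconds (illustrative helper, Semtech formula)."""
    t_sym_ms = (2 ** sf) / bw_hz * 1000.0
    # Low data rate optimisation is mandated once a symbol exceeds 16 ms
    # (SF11/SF12 at 125 kHz); it does not apply at SF10.
    de = 1 if t_sym_ms > 16.0 else 0
    ih = 0 if explicit_header else 1
    numerator = 8 * payload_len - 4 * sf + 28 + (16 if crc else 0) - 20 * ih
    denominator = 4 * (sf - 2 * de)
    payload_syms = 8 + max(math.ceil(numerator / denominator) * (cr + 4), 0)
    return (preamble_syms + 4.25 + payload_syms) * t_sym_ms


# Join-request: 23 bytes, uplink CRC on, DR2 = SF10BW125
print(round(lora_time_on_air_ms(23, sf=10), 3))             # 370.688
# Join-accept: 33 bytes, downlinks are normally sent without a payload CRC
print(round(lora_time_on_air_ms(33, sf=10, crc=False), 3))  # 452.608
```

The join-request figure agrees with the `toa: 370` the mDot reports, which suggests the node really is transmitting at SF10BW125 as configured.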

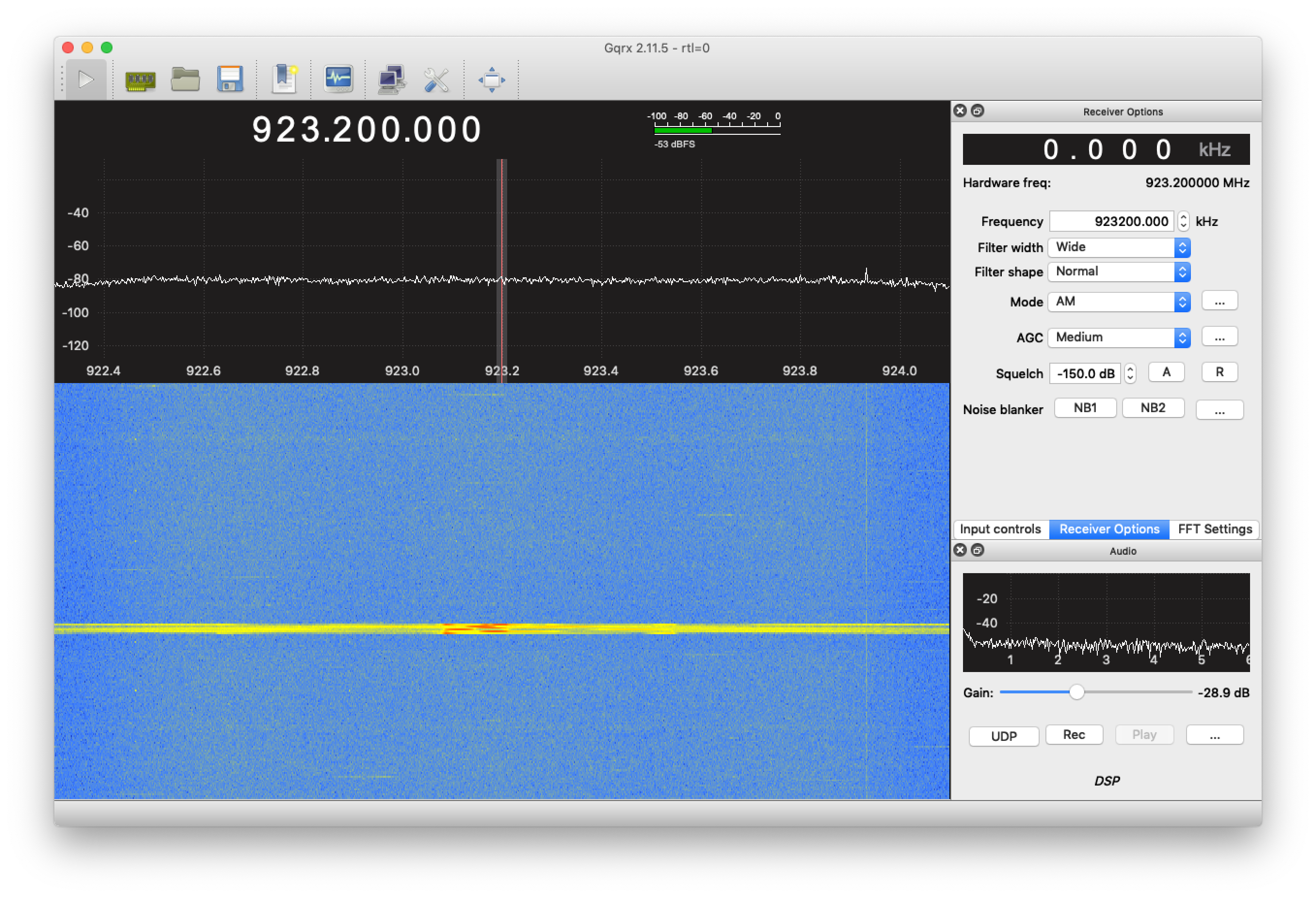

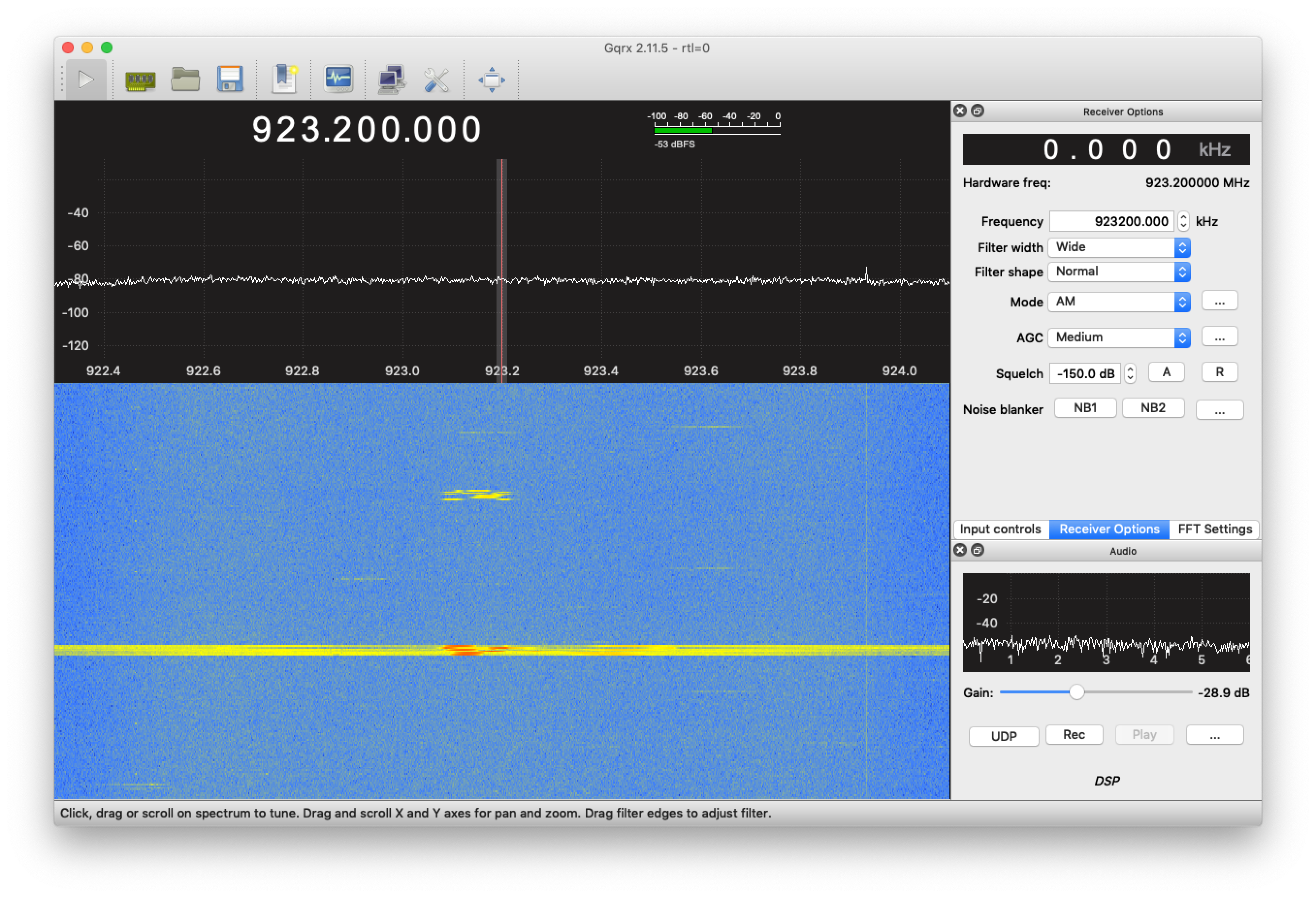

The only potential issue I can see is that the join-request goes out on 923.4 MHz while the join-accept is sent on 923.2 MHz. I can’t quite tell from Regional Parameters whether this is acceptable or not.
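One way to frame the frequency question is to write down which receive windows the node should open and see which of them the observed downlink could land in. The sketch below rests on one reading of the AS923 defaults, which is an assumption here rather than something confirmed against TTN's scheduler: RX1 reuses the uplink channel at the uplink data rate minus the RX1 offset, and RX2 uses the fixed frequency and data rate the mDot prints as `Join rx2 freq` and `Join rx2 dr`, one second after RX1. All names are illustrative and dwell-time limits are ignored.

```python
from dataclasses import dataclass

JOIN_ACCEPT_DELAY1_S = 5    # "Join delay" in the mDot log
JOIN_ACCEPT_DELAY2_S = 6    # assumed: RX2 opens one second after RX1
RX2_FREQ_HZ = 923_200_000   # "Join rx2 freq" in the mDot log
RX2_DR = 2                  # "Join rx2 dr" in the mDot log


@dataclass(frozen=True)
class RxWindow:
    name: str
    delay_s: int
    freq_hz: int
    dr: int


def join_rx_windows(uplink_freq_hz, uplink_dr, rx1_dr_offset=0):
    """Windows the node is expected to open after a join-request (simplified)."""
    rx1_dr = max(uplink_dr - rx1_dr_offset, 0)
    return [
        RxWindow("RX1", JOIN_ACCEPT_DELAY1_S, uplink_freq_hz, rx1_dr),
        RxWindow("RX2", JOIN_ACCEPT_DELAY2_S, RX2_FREQ_HZ, RX2_DR),
    ]


def windows_matching(downlink_freq_hz, downlink_dr, windows):
    """Names of the windows whose frequency and data rate fit the downlink."""
    return [w.name for w in windows
            if w.freq_hz == downlink_freq_hz and w.dr == downlink_dr]


windows = join_rx_windows(uplink_freq_hz=923_400_000, uplink_dr=2)
print(windows_matching(923_200_000, 2, windows))  # ['RX2']
```

If that reading is right, a join-accept on 923.2 MHz can only be heard in RX2, roughly 6 s after the end of the uplink, so the gateway's actual transmit timestamp would be worth checking alongside the frequency.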

Eventually the join succeeds, somewhere between 1 and 75 attempts later. I haven’t pinned down a successful join yet to compare.

I see there are a lot of threads on unsuccessful joins, even ones about the join-accept never being received, but none of the advice appears to help. I’m generally led to threads on LMiC (such as LMiC does not receive Join Accept) which recommend calling LMIC_setClockError. That led me to generate, capture and carefully compare timestamps, but I couldn’t see any issues. If the issue were the join rx1 window opening a fraction of a second too early, my one-second log resolution probably wouldn’t show it.
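To get a feel for how small a timing error would matter here, the drift accumulated over the join delay can be expressed in LoRa symbols and compared with the 8-symbol preamble. This is a back-of-the-envelope sketch with a made-up function name; the clock error values are hypothetical examples, not measurements from the mDot or Seeeduino.

```python
def drift_in_symbols(delay_s, clock_error_fraction, sf, bw_hz=125_000):
    """Timing drift over delay_s, in units of one LoRa symbol."""
    t_sym_s = (2 ** sf) / bw_hz          # 8.192 ms at SF10BW125
    return delay_s * clock_error_fraction / t_sym_s


# Hypothetical clock errors over the 5 s join delay at SF10
for error in (0.001, 0.01):
    symbols = drift_in_symbols(delay_s=5, clock_error_fraction=error, sf=10)
    print(f"{error:.1%} clock error -> {symbols:.2f} symbols of drift")
```

With these example numbers, a 1% error shifts the window by about 50 ms, around six symbols at SF10, which is most of the preamble and far below what one-second timestamps can resolve.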

Is there anything I can do to further pin down the issue? What could be going wrong between TTN sending the join-accept and the mDot/Seeeduino receiving it? Are others experiencing similar issues?

FWIW, after a successful join, the first 15 or so ADR downlinks get rejected by the node due to Channel Mask errors. After that they start to apply correctly and the LinkCheckReq messages also start working. Not sure if that’s related.