Absolutely. But only for debugging frequency, signal strength & gateway coverage, which isn’t a daily thing.

Absolutely not. But it’s very useful for if/when an HTTP or MQTT integration has a glitch and you end up with a gap. And it’s brilliant for interactive development coupled with the JavaScript decoder.

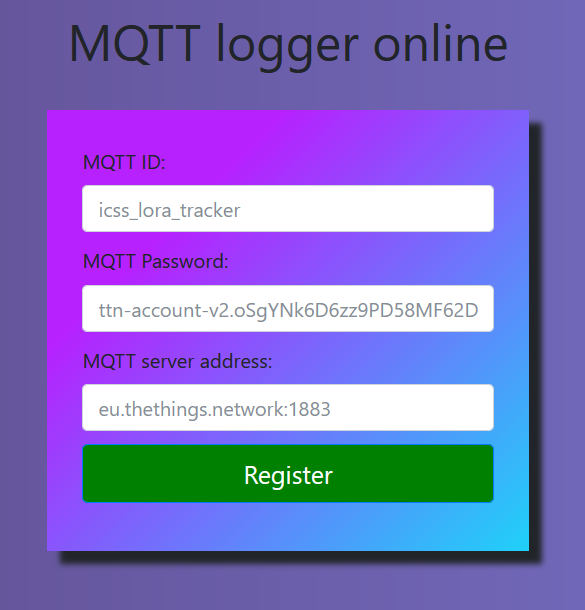
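To work out whether an integration glitch has left a gap worth back-filling from Data Storage, something like the following could be run over the timestamps you did receive. It's only a sketch: `find_gaps` and its `tolerance` parameter are names made up for illustration, and it assumes devices uplink at a roughly fixed interval.

```python
from datetime import datetime, timedelta


def find_gaps(timestamps, expected_interval, tolerance=1.5):
    """Return (last_seen, next_seen) pairs where consecutive uplinks are
    further apart than tolerance * expected_interval."""
    ordered = sorted(timestamps)
    limit = expected_interval * tolerance
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > limit]


# Hypothetical receive times for one device on a 15 minute schedule.
received = [
    datetime(2021, 1, 1, 0, 0),
    datetime(2021, 1, 1, 0, 15),
    datetime(2021, 1, 1, 1, 0),   # two uplinks missing before this one
    datetime(2021, 1, 1, 1, 15),
]

for last_seen, next_seen in find_gaps(received, timedelta(minutes=15)):
    print(f"gap between {last_seen} and {next_seen}")
```

Each pair it reports is a window you'd then query Data Storage for, while the data is still inside the retention period.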

One of my desktop dashboard & management packages pulls the data from Data Storage under its own steam - so no remote server for HTTP/MQTT is required and there’s no internal MQTT process that can suffer. I can send an installer exe, they install it and it just works.

Sure, long term data, trends, machine learning, all sorts come from lots of data points. But if you can organise a gateway & set up (or even create) devices, you should be able to cope with installing & configuring an appropriately documented PHP script on a low cost virtual host, or the same with Python.
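To give a feel for how small the Python version can be, here is a rough sketch using only the standard library. It assumes the HTTP integration POSTs a JSON body to your host and stores that body untouched in SQLite, so it makes no assumptions about the fields inside. The file name, table layout, port and function names are all invented for illustration, and there is no authentication or TLS, which a real deployment would need.

```python
import json
import sqlite3
from datetime import datetime, timezone
from http.server import BaseHTTPRequestHandler, HTTPServer

DB_PATH = "uplinks.db"  # hypothetical file name


def open_db(path=DB_PATH):
    """Open the database and create the table on first use."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS uplinks ("
        "id INTEGER PRIMARY KEY, received_at TEXT NOT NULL, body TEXT NOT NULL)"
    )
    return conn


def store_uplink(conn, raw_body):
    """Check the body is valid JSON, store it as received, return the row id."""
    text = raw_body.decode("utf-8")
    json.loads(text)  # raises ValueError if the body is not JSON
    received_at = datetime.now(timezone.utc).isoformat()
    cur = conn.execute(
        "INSERT INTO uplinks (received_at, body) VALUES (?, ?)",
        (received_at, text),
    )
    conn.commit()
    return cur.lastrowid


class UplinkHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        conn = open_db()
        try:
            store_uplink(conn, body)
            self.send_response(204)  # stored, nothing to send back
        except ValueError:
            self.send_response(400)  # body was not valid UTF-8 JSON
        finally:
            conn.close()
        self.end_headers()


def run_server(port=8080):
    """Serve until interrupted; call this yourself to start listening."""
    HTTPServer(("", port), UplinkHandler).serve_forever()
```

Keeping the raw body means the decoding into data points can be done (and redone) later, which is usually what you want when the payload format is still changing.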

There’s nothing wrong with your idea per se, but you need to go into this with your eyes open and with Ts&Cs that protect you, so you can stop if it gets out of hand or limit people’s expectations about functionality & data assurance (backup & integrity). If you start charging, that’s when the pain begins, and freemium as a road to riches is an urban myth - getting 5% of your users to pay is considered a success. But in this arena, most TTN users are either tech self-service or aren’t working at a scale where they’d want to pay for data storage. We see this from the people travelling hopefully that TTN can service their commercial needs for free (absolutely fine, BTW) but with expectations of a responsive SLA (not here, use TTI).

I think scaling is also something you need to consider. I’ve devices collecting 10 data points every 15 minutes; that’s 96 uplinks and so 960 data points per day per device. Go for a smallish scale deployment, say 50 devices (I’m thinking refrigeration unit management at a distribution centre), and that’s 48,000 data points per day plus the 4,800 raw records. One year on, you are storing 17,520,000 data points, and then a manager makes a mistake with a query that Access/Excel/Tableau allows them to generate and it sets your database on fire.
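If you want to size this for your own numbers, a back-of-envelope calculation along these lines is enough. The class and field names are made up for illustration, and it assumes every device uplinks on a fixed interval that divides evenly into a day.

```python
from dataclasses import dataclass

MINUTES_PER_DAY = 24 * 60


@dataclass(frozen=True)
class Deployment:
    devices: int
    points_per_uplink: int
    uplink_interval_minutes: int

    @property
    def uplinks_per_device_per_day(self):
        return MINUTES_PER_DAY // self.uplink_interval_minutes

    @property
    def raw_records_per_day(self):
        # One raw record per uplink, across all devices.
        return self.devices * self.uplinks_per_device_per_day

    @property
    def data_points_per_day(self):
        return self.raw_records_per_day * self.points_per_uplink

    def data_points_after(self, days):
        return self.data_points_per_day * days


fridges = Deployment(devices=50, points_per_uplink=10, uplink_interval_minutes=15)
print("uplinks per device per day:", fridges.uplinks_per_device_per_day)
print("raw records per day:", fridges.raw_records_per_day)
print("data points per day:", fridges.data_points_per_day)
print("data points after a year:", fridges.data_points_after(365))
```

Change the interval or the device count and see how quickly the yearly figure moves; that is the number your database and your users' ad hoc queries have to live with.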

You can consider raw data only, but at some point that has to be translated into data points, so the user will need to download it, process it and put it into a database, which rather diminishes the point of your proposal - they may as well use the 7 day Data Storage.

What is your primary goal here? Creating a community resource for self-deployment has merit. But a commercial venture needs a lot of consideration.

If this idea is inspired solely off the back of pernickety details in the HTTP or MQTT integrations (and there are some really good ways to suffer an exception that you didn’t expect to ever be a thing), start another topic and I’d hope we can get you up & running.