Hello!

I recently took a dive into LoRa. I have a SAM R34 Xplained Pro evaluation module and The Things Kickstarter Gateway (TTKG), both of which have been configured using the Console in The Things Stack: Community Edition. I used the LoRaWAN Mote Application provided by Microchip to test these devices.

My first attempt to connect to TTN with the SAM R34 and the TTKG, using OTAA to join, was successful. However, being inexperienced, I turned off both devices at the end of the day, expecting to pick up where I left off the next day. I have since learned that OTAA requires a unique DevNonce for every join request. I will eventually figure out how to increment it and store it in the SAM R34’s NVM, but that’s for another day. Since I am still learning and developing, I followed the advice in other forum posts and switched to ABP.
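The DevNonce bookkeeping could eventually look something like the minimal C sketch below. It assumes a LoRaWAN 1.0.4-style DevNonce that counts up from 0 (earlier 1.0.x versions use a random value instead). `nvm_read_u16()` and `nvm_write_u16()` are hypothetical placeholders, not Microchip APIs, and here they are backed by a plain variable that stands in for flash or EEPROM emulation.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-in for one 16-bit word of non-volatile memory.
 * On real hardware this would live in flash / EEPROM emulation. */
static uint16_t fake_nvm_word = 0;

static uint16_t nvm_read_u16(void) { return fake_nvm_word; }
static void nvm_write_u16(uint16_t value) { fake_nvm_word = value; }

/* Hands out the DevNonce for the next Join-Request. The value after it is
 * written to NVM *before* the request is sent, so a power cycle can never
 * cause the same DevNonce to be transmitted twice. Returns false instead
 * of wrapping around to a value that has already been used. */
static bool next_dev_nonce(uint16_t *out)
{
    uint16_t nonce = nvm_read_u16();   /* next unused value */
    if (nonce == UINT16_MAX) {
        return false;                  /* counter exhausted */
    }
    nvm_write_u16((uint16_t)(nonce + 1u));
    *out = nonce;
    return true;
}
```

Persisting the counter before transmitting means that an interrupted or failed join only wastes a value and never repeats one.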

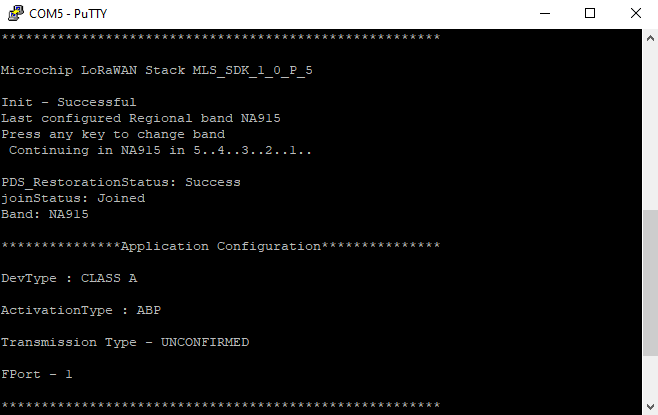

After I created a new end device in the Console and entered the same required fields in both the Console and the SAM R34 firmware (hard-coded), the SAM R34 “joined” the network successfully, and the terminal reports that it did so.

However, this is where I began to run into problems. The end device’s Live Data tab in the Console shows no uplink messages, and the gateway’s Live Data tab shows no incoming payloads at all (both showed traffic when I was using OTAA). Furthermore, the end device’s Overview tab reports no uplinks or downlinks and says that no activity has ever been detected from the device.

Here is what I have tried so far:

- Triple-checking that I have all of the right EUIs and Keys on the Console and the SAM R34

- Making sure that the correct frequency plan (NA_915) and sub-band (FSB 2) are set on the Console, the SAM R34, and the Gateway

- Reprogramming the SAM R34

- Restarting the Gateway
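To double-check the sub-band setting, it can help to compute the actual uplink frequencies and compare them with the gateway’s configuration and the channels enabled on the SAM R34. The minimal C sketch below encodes the US902-928 uplink channel plan from the LoRaWAN Regional Parameters, assuming sub-bands are numbered 1 to 8. The helper names `us915_uplink_freq_hz()`, `fsb_first_channel()` and `fsb_wide_channel()` are hypothetical and not part of the Mote Application.

```c
#include <stdint.h>

/* US902-928 uplink channels (LoRaWAN Regional Parameters):
 *   channels 0-63:  125 kHz, 902.3 MHz + 200 kHz * n
 *   channels 64-71: 500 kHz, 903.0 MHz + 1.6 MHz * (n - 64)
 * Returns the centre frequency in Hz, or 0 for an invalid channel. */
static uint32_t us915_uplink_freq_hz(unsigned channel)
{
    if (channel < 64u) {
        return 902300000u + 200000u * channel;
    }
    if (channel < 72u) {
        return 903000000u + 1600000u * (channel - 64u);
    }
    return 0u;
}

/* Sub-band (FSB) 1..8 covers the eight 125 kHz channels starting at
 * 8 * (fsb - 1), plus the single 500 kHz channel 63 + fsb. */
static unsigned fsb_first_channel(unsigned fsb) { return 8u * (fsb - 1u); }
static unsigned fsb_wide_channel(unsigned fsb) { return 63u + fsb; }
```

For FSB 2 this gives channels 8 to 15 (903.9 MHz to 905.3 MHz in 200 kHz steps) plus channel 65 (904.6 MHz, 500 kHz). The gateway and the end device must both use these channels for uplinks to be received.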

Could anyone shed some light on what I might be missing? Perhaps a piece of documentation or a configuration detail that I overlooked along the way?

Thank you all in advance!