Hello everyone,

I'm running into an issue when trying to send downlink messages to any device in my TTN community setup running in a Kubernetes (k3s) cluster. A parallel setup on docker-compose works just fine: the devices I ask for reports respond.

Here is my setup in detail:

-

Multitech MTCDT-H5-210L Firmware 5.3.0 set up as packet forwarder 4.0.1-r32.0, which sends incoming messages to 1700 UDP port and receives messages from the same exposed port on kubernetes cluster on TTN container

-

Gateway is set up in TTN and ACK and statistics are correctly received, dumping on 1700 port on TTN I see packets coming back and forth

-

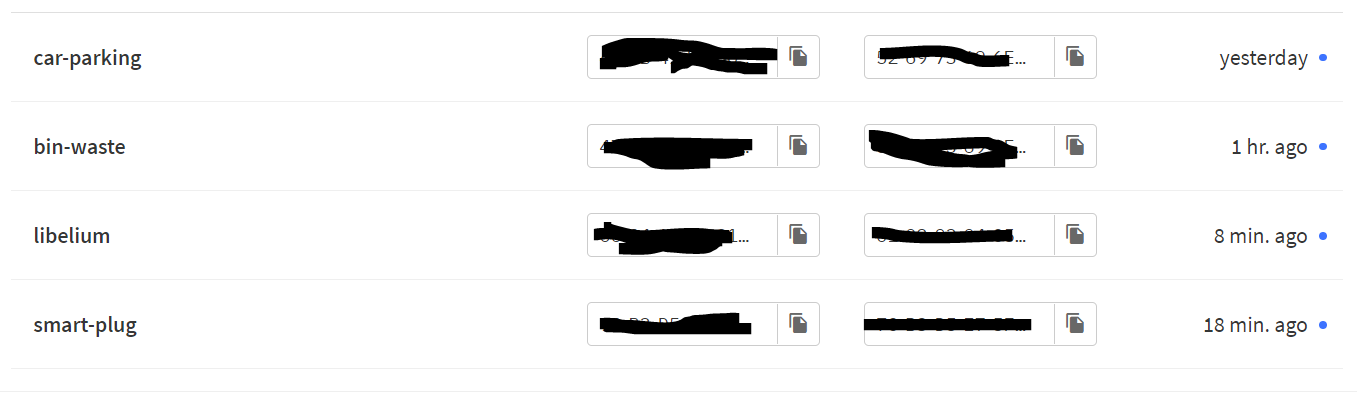

4 devices are set up and make join requests correctly.

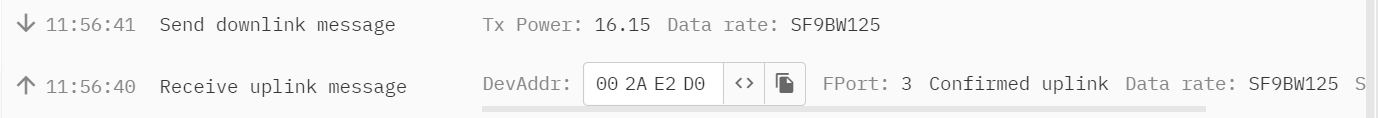

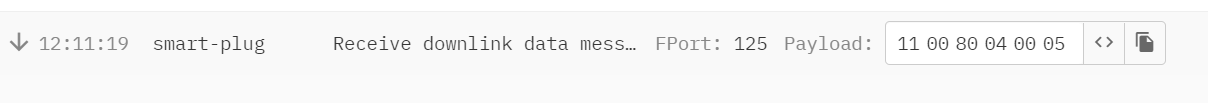

I'm trying to send downlink messages to the smart plug. This works with the docker-compose instance, but when I try the same with the k3s instance I get:

There is no answer. Dumping port 1700, I see no messages leaving the TTN pod.
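
To check what actually crosses port 1700, it can help to decode the 4-byte header of each captured datagram. The sketch below follows the Semtech UDP packet forwarder protocol (PROTOCOL.TXT in the lora-net/packet_forwarder repository, protocol version 2) and assumes each UDP payload is already available as `bytes`, for example from a capture; the function name `classify_semtech_udp` is hypothetical. A downlink from the server to the gateway shows up as a `PULL_RESP` (identifier 0x03).

```python
import json

# Packet identifiers (byte 3 of every datagram) from the Semtech UDP
# packet forwarder protocol, version 2.
PACKET_TYPES = {
    0x00: "PUSH_DATA",  # gateway -> server: uplinks and status
    0x01: "PUSH_ACK",   # server -> gateway: acknowledges PUSH_DATA
    0x02: "PULL_DATA",  # gateway -> server: keep-alive that opens the downlink path
    0x03: "PULL_RESP",  # server -> gateway: a downlink to transmit
    0x04: "PULL_ACK",   # server -> gateway: acknowledges PULL_DATA
    0x05: "TX_ACK",     # gateway -> server: outcome of a PULL_RESP
}

# Types that carry the 8-byte gateway EUI in bytes 4-11.
HAS_GATEWAY_EUI = {"PUSH_DATA", "PULL_DATA", "TX_ACK"}
# Types that may carry a JSON object after the header.
HAS_JSON = {"PUSH_DATA", "PULL_RESP", "TX_ACK"}


def classify_semtech_udp(payload: bytes) -> dict:
    """Decode the header (and JSON body, if any) of one datagram seen on port 1700."""
    if len(payload) < 4:
        raise ValueError("datagram shorter than the 4-byte Semtech header")
    kind = PACKET_TYPES.get(payload[3], f"UNKNOWN(0x{payload[3]:02x})")
    result = {
        "version": payload[0],
        "token": payload[1:3].hex(),  # random token, reused by the matching ACK
        "type": kind,
    }
    offset = 4
    if kind in HAS_GATEWAY_EUI:
        if len(payload) < 12:
            raise ValueError(f"{kind} datagram without a gateway EUI")
        result["gateway_eui"] = payload[4:12].hex().upper()
        offset = 12
    # Assumption: some forwarders may NUL-terminate the JSON, so strip zero bytes.
    body = payload[offset:].rstrip(b"\x00")
    if kind in HAS_JSON and body:
        result["json"] = json.loads(body.decode("utf-8"))
    return result
```

If scheduling a downlink never produces a `PULL_RESP` leaving the pod, the downlink was most likely never handed to the gateway at all, which would be consistent with the "No available downlink to send" entry in the logs.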

In the application logs I get the following:

| Level | Message | Fields |
|---|---|---|
| DEBUG | Replace downlink queue | {device_uid: aqi.smart-plug, downlink_count: 1, grpc.method: DownlinkQueueReplace, grpc.service: ttn.lorawan.v3.AsNs, namespace: networkserver, request_id: 01G9HYN9ASXV9E2XXD5BTCDW7Q} |
| DEBUG | Replaced application downlink queue | {active_session_queue_length: 1, device_uid: aqi.smart-plug, grpc.method: DownlinkQueueReplace, grpc.service: ttn.lorawan.v3.AsNs, namespace: networkserver, pending_session_queue_length: 0, request_id: 01G9HYN9ASXV9E2XXD5BTCDW7Q} |
| DEBUG | Application downlink with no absolute time, choose unconfirmed network-initiated downlink slot | {active_session_queue_length: 1, device_uid: aqi.smart-plug, earliest_at: 1659533698.5994666, grpc.method: DownlinkQueueReplace, grpc.service: ttn.lorawan.v3.AsNs, namespace: networkserver, pending_session_queue_length: 0, request_id: 01G9HYN9ASXV9E2XXD5BTCDW7Q} |
| DEBUG | Add downlink task | {active_session_queue_length: 1, device_uid: aqi.smart-plug, grpc.method: DownlinkQueueReplace, grpc.service: ttn.lorawan.v3.AsNs, namespace: networkserver, pending_session_queue_length: 0, request_id: 01G9HYN9ASXV9E2XXD5BTCDW7Q, start_at: 1659533698.5994666} |
| INFO | Finished unary call | {duration: 0.0092, grpc.method: DownlinkQueueReplace, grpc.request.application_id: aqi, grpc.request.device_id: smart-plug, grpc.service: ttn.lorawan.v3.AsNs, namespace: grpc, peer.address: pipe, request_id: 01G9HYN9ASXV9E2XXD5BTCDW7Q} |
| DEBUG | Publish upstream message | {application_uid: aqi, namespace: applicationserver/io/pubsub, provider: *ttnpb.ApplicationPubSub_Mqtt, pub_sub_id: mosquitto-sub} |
| DEBUG | Handle downlink messages | {application_uid: aqi, count: 0, device_uid: aqi.smart-plug, namespace: applicationserver/io/pubsub, operation: replace, provider: *ttnpb.ApplicationPubSub_Mqtt, pub_sub_id: mosquitto-sub} |
| INFO | Finished unary call | {auth.token_id: MSEQY257U6WVPW7GCTVAR5NBXDUEUZVIN6V7XDY, auth.token_type: AccessToken, auth.user_id: admin, duration: 0.0313, grpc.method: DownlinkQueueReplace, grpc.request.application_id: aqi, grpc.request.device_id: smart-plug, grpc.service: ttn.lorawan.v3.AppAs, namespace: grpc, peer.address: pipe, peer.real_ip: 10.42.0.1, request_id: 01G9HYN99TQ1MW9B868D9D6YMZ} |
| INFO | Request handled | {auth.token_id: MSEQY257U6WVPW7GCTVAR5NBXDUEUZVIN6V7XDY, auth.token_type: AccessToken, duration: 0.0462, http.method: POST, http.path: /api/v3/as/applications/aqi/devices/smart-plug/down/replace, http.status: 200, namespace: web, peer.address: 10.42.0.7:39000, peer.real_ip: 10.42.0.1, request_id: 01G9HYN99TQ1MW9B868D9D6YMZ} |
| DEBUG | Replace downlink queue | {device_uid: aqi.smart-plug, downlink_count: 0, grpc.method: DownlinkQueueReplace, grpc.service: ttn.lorawan.v3.AsNs, namespace: networkserver, request_id: 01G9HYN9B9ATK9N84HH4AEEPQY} |
| DEBUG | Replaced application downlink queue | {active_session_queue_length: 0, device_uid: aqi.smart-plug, grpc.method: DownlinkQueueReplace, grpc.service: ttn.lorawan.v3.AsNs, namespace: networkserver, pending_session_queue_length: 0, request_id: 01G9HYN9B9ATK9N84HH4AEEPQY} |
| INFO | Finished unary call | {duration: 0.0026, grpc.method: DownlinkQueueReplace, grpc.request.application_id: aqi, grpc.request.device_id: smart-plug, grpc.service: ttn.lorawan.v3.AsNs, namespace: grpc, peer.address: pipe, request_id: 01G9HYN9B9ATK9N84HH4AEEPQY} |
| DEBUG | Process downlink task | {device_uid: aqi.smart-plug, namespace: networkserver, start_at: 1659533698.5994666, started_at: 1659533698.6048272} |
| DEBUG | No available downlink to send, skip downlink slot | {band_id: EU_863_870, dev_addr: 01B46147, device_class: CLASS_C, device_uid: aqi.smart-plug, downlink_type: data, earliest_at: 1659533698.6098716, frequency_plan_id: EU_863_870_TTN, namespace: networkserver, started_at: 1659533698.6048272} |
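
With longer logs it may help to trace every queue replacement for one device and see how many downlinks each one leaves behind. The sketch below assumes log rows in exactly the `| LEVEL | message | {key: value, ...} |` shape pasted above; the real log output of the stack may be formatted differently, and the helper names `parse_row` and `queue_replacements` are hypothetical.

```python
import re

# One pasted log row: | LEVEL | message | {key: value, key: value} |
ROW = re.compile(r"^\|\s*(\w+)\s*\|\s*(.*?)\s*\|\s*\{(.*)\}\s*\|$")


def parse_row(line):
    """Split a pasted log row into (level, message, fields); None for non-rows."""
    match = ROW.match(line.strip())
    if match is None:
        return None  # e.g. the |---|---|---| separator or the header row
    level, message, raw_fields = match.groups()
    fields = {}
    for part in raw_fields.split(", "):
        key, _, value = part.partition(": ")
        fields[key] = value
    return level, message, fields


def queue_replacements(lines, device_uid):
    """Return (request_id, downlink_count) for each queue replacement of a device."""
    replacements = []
    for line in lines:
        row = parse_row(line)
        if row is None:
            continue
        _, message, fields = row
        if message == "Replace downlink queue" and fields.get("device_uid") == device_uid:
            replacements.append((fields.get("request_id"), int(fields["downlink_count"])))
    return replacements
```

Applied to the excerpt above for `aqi.smart-plug`, it returns a replacement with downlink_count 1 (request 01G9HYN9ASXV9E2XXD5BTCDW7Q) followed by one with downlink_count 0 (request 01G9HYN9B9ATK9N84HH4AEEPQY), both logged before the "Process downlink task" entry.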

In a nutshell: "No available downlink to send, skip downlink slot". Looking at the code, this message is logged only if `dev.GetSession().GetQueuedApplicationDownlinks()` is empty (see `utils.go` in TheThingsNetwork/lorawan-stack at commit 951998d836020e46a79160cef3006e0995fad220, lines 320 and 370).

What might be causing this?

Other issues I've had in the past concerned pointers to the Redis instance, for example. I had to specify:

- name: TTN_LW_REDIS_ADDRESS
  value: "stack-redis-master:6379"

and then (not explicitly documented):

- name: TTN_LW_EVENTS_REDIS_ADDRESS
  value: "stack-redis-master:6379"
- name: TTN_LW_CACHE_REDIS_ADDRESS
  value: "stack-redis-master:6379"

The default (localhost:6379) works fine on docker-compose, but in k3s localhost:6379 does not point to Redis, so things break.
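
Since these variables silently fall back to localhost:6379, a quick check of the pod environment can rule out a Redis address that still points at the pod itself. This is a minimal sketch: it only covers the three variable names from this post, and the helper `find_local_redis_addresses` is hypothetical; other components may read further Redis settings not listed here.

```python
import os

# The three variables mentioned in this post; others may exist (assumption).
REDIS_ADDRESS_VARS = (
    "TTN_LW_REDIS_ADDRESS",
    "TTN_LW_EVENTS_REDIS_ADDRESS",
    "TTN_LW_CACHE_REDIS_ADDRESS",
)
LOCAL_HOSTS = {"localhost", "127.0.0.1", "::1"}


def find_local_redis_addresses(env):
    """Map each suspicious variable name to a short explanation."""
    problems = {}
    for name in REDIS_ADDRESS_VARS:
        value = env.get(name)
        if not value:
            problems[name] = "unset, so the localhost:6379 default applies"
            continue
        # Drop the port and any IPv6 brackets, e.g. "[::1]:6379" -> "::1".
        host = value.rsplit(":", 1)[0].strip("[]")
        if host in LOCAL_HOSTS:
            problems[name] = f"{value} resolves to the pod itself, not to Redis"
    return problems


if __name__ == "__main__":
    for name, reason in find_local_redis_addresses(os.environ).items():
        print(f"{name}: {reason}")
```

Run inside the TTN pod (for example through `kubectl exec`), it prints nothing when all three variables point at a remote Redis host.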

I haven't found any other environment variable related to the downlink scheduler that might be causing this issue.

Moreover, I'm not getting any error message that would point to such a misconfiguration.

What tools might help debugging this issue?

Do you have any idea what might be causing this?

Thank you and best regards,

Daniele