If you can share the data sources, types & sizes we may be able to help in more detail. Are you packing the payload for battery savings or because you have a lot of data to move?

A simple compression scheme is best evaluated by experiment: try various ways of generating a common tree which, with bit packing and different values, may benefit at the nibble (4-bit) level. Quite often the grouping or order of the payload will make a difference.

Rather like solving a Sudoku or routing a PCB, looking at the data for patterns may reveal something useful, so lots of sample data on screen can help. Watch any of the Enigma films for inspiration.

You could look at rate of change as well, and using the suggested port number scheme above, send payloads with different data at different intervals depending on how quickly it changes.

I’ve used a byte as 8 flags to indicate which fields I’m sending, so if something hasn’t changed since the last transmission or since the last packet was appended, a 0 indicates that field isn’t being included. If you’ve 8 integer (2 byte) values and half don’t change, you need only a single flag byte plus 4 x 2 bytes = 9 bytes rather than sending 8 x 2 = 16 bytes. If you use a bit as a flag & a bit for a sign, you can replace a 2 byte field with a single byte that carries a change of up to ±63 (the remaining 6 bits).
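As a rough sketch of the flag-byte idea, assuming up to 8 signed 16-bit fields sent big-endian: the function names `encode_changed` and `decode_changed` are made up for illustration, and a real device would more likely do this in C, but the layout is the same.

```python
import struct

def encode_changed(current, previous):
    """Pack only the fields that differ from the previous transmission.

    Byte 0 is a bitmask: bit i set means field i follows as a
    big-endian signed 16-bit value. Unchanged fields are omitted.
    """
    if len(current) != len(previous) or len(current) > 8:
        raise ValueError("expected two equal-length sequences of at most 8 fields")
    flags = 0
    body = b""
    for i, (now, before) in enumerate(zip(current, previous)):
        if now != before:
            flags |= 1 << i
            body += struct.pack(">h", now)
    return bytes([flags]) + body

def decode_changed(payload, previous):
    """Rebuild the full field list from a payload and the last known values."""
    flags = payload[0]
    values = list(previous)
    offset = 1
    for i in range(len(values)):
        if flags & (1 << i):
            (values[i],) = struct.unpack_from(">h", payload, offset)
            offset += 2
    return values

previous = [100, 200, 300, 400, 500, 600, 700, 800]
current = [100, 201, 300, 399, 500, 650, 700, -5]
payload = encode_changed(current, previous)
print(len(payload))  # 9 bytes: 1 flag byte + 4 changed fields x 2 bytes
```

The decoder has to hold the last known values, so a lost uplink leaves it out of step until the affected fields change again; sending a full payload every so often is one way round that.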

Alternatively, if you have a happy zone for a value that is only going to be aggregated / decimated almost as soon as it’s in your database, you can do that on the device. I rarely end up showing more detail than an hourly average for acceptable values, so in theory I could have the device send the averages for me. If the value is close to a boundary or in an alarm condition, then I can code it to send the detail. Or send the average when it’s acceptable and not changing at more than a certain rate, and switch over to detail if there is a sudden (but smoothed) spike in the reading.
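A minimal sketch of that switch-over, with everything hypothetical: `choose_report`, its parameters and the thresholds are placeholders you would tune against your own data, and it compares raw consecutive readings rather than smoothing them first.

```python
def choose_report(readings, low, high, margin, max_step):
    """Decide whether to send one average or the full detail.

    Sends the average only if every reading sits at least `margin`
    inside the [low, high] happy zone and no two consecutive readings
    differ by more than `max_step`. Otherwise sends every reading.
    """
    if not readings:
        raise ValueError("need at least one reading")
    in_happy_zone = all(low + margin <= r <= high - margin for r in readings)
    steady = all(abs(b - a) <= max_step for a, b in zip(readings, readings[1:]))
    if in_happy_zone and steady:
        return "average", [sum(readings) / len(readings)]
    return "detail", list(readings)

print(choose_report([20, 21, 20, 21], low=10, high=30, margin=2, max_step=3))
print(choose_report([20, 21, 29, 21], low=10, high=30, margin=2, max_step=3))
```

The first call is steady and well inside the zone so it collapses to a single average; the second gets too close to the upper boundary and keeps the detail.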

The device could save all the readings for a time period and use a variety of schemes to get the best value out of the payload. You can then employ a mechanism that allows you to request a set of data at various levels of detail if you need it.

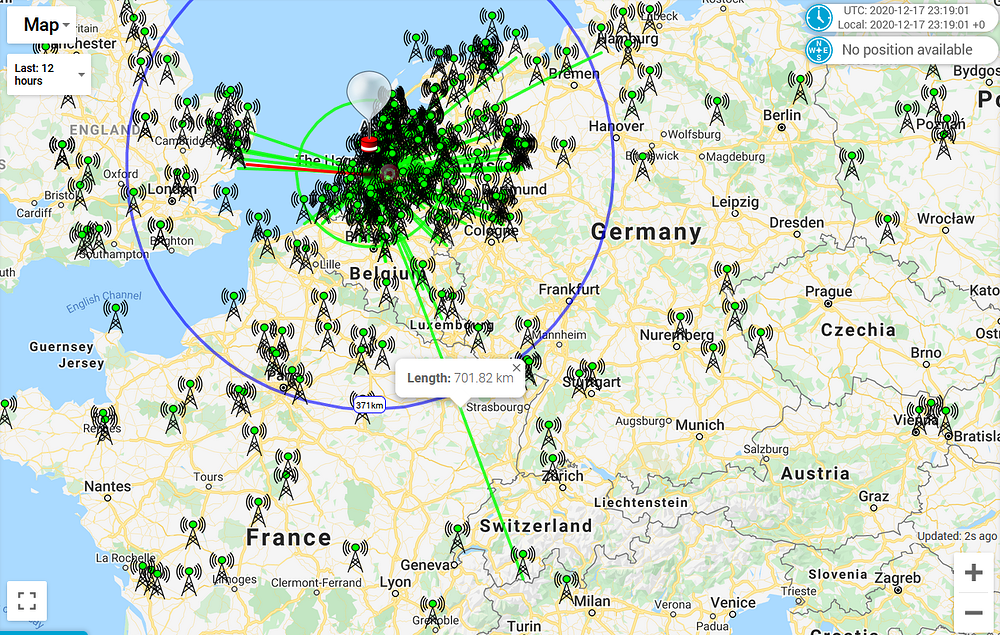

If you have GPS data, setting your own geographic reference point or having a confirmed uplink for a daily reference point will then allow deltas to be sent.
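For the GPS deltas, something along these lines could work. It assumes the device and the decoder already agree on the reference point, and the step size of 1e-5 degrees (roughly 1.1 m of latitude) and the 16-bit fields are my choices for the example, giving a range of about ±0.33 degrees around the reference. `encode_position` and `decode_position` are illustrative names.

```python
import struct

SCALE = 100_000  # assumed resolution: steps of 1e-5 degrees

def encode_position(lat, lon, ref_lat, ref_lon):
    """Pack a position as two signed 16-bit offsets from the reference point."""
    dlat = round((lat - ref_lat) * SCALE)
    dlon = round((lon - ref_lon) * SCALE)
    if not (-32768 <= dlat <= 32767 and -32768 <= dlon <= 32767):
        raise ValueError("too far from the reference point for a 16-bit delta")
    return struct.pack(">hh", dlat, dlon)

def decode_position(payload, ref_lat, ref_lon):
    """Recover latitude and longitude from a 4-byte delta payload."""
    dlat, dlon = struct.unpack(">hh", payload)
    return ref_lat + dlat / SCALE, ref_lon + dlon / SCALE

payload = encode_position(52.01234, 4.99, ref_lat=52.0, ref_lon=5.0)
print(len(payload))  # 4 bytes for the pair
print(decode_position(payload, ref_lat=52.0, ref_lon=5.0))
```

If the device wanders out of range the encoder raises, which is the point at which you would fall back to a full position or agree a new reference.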

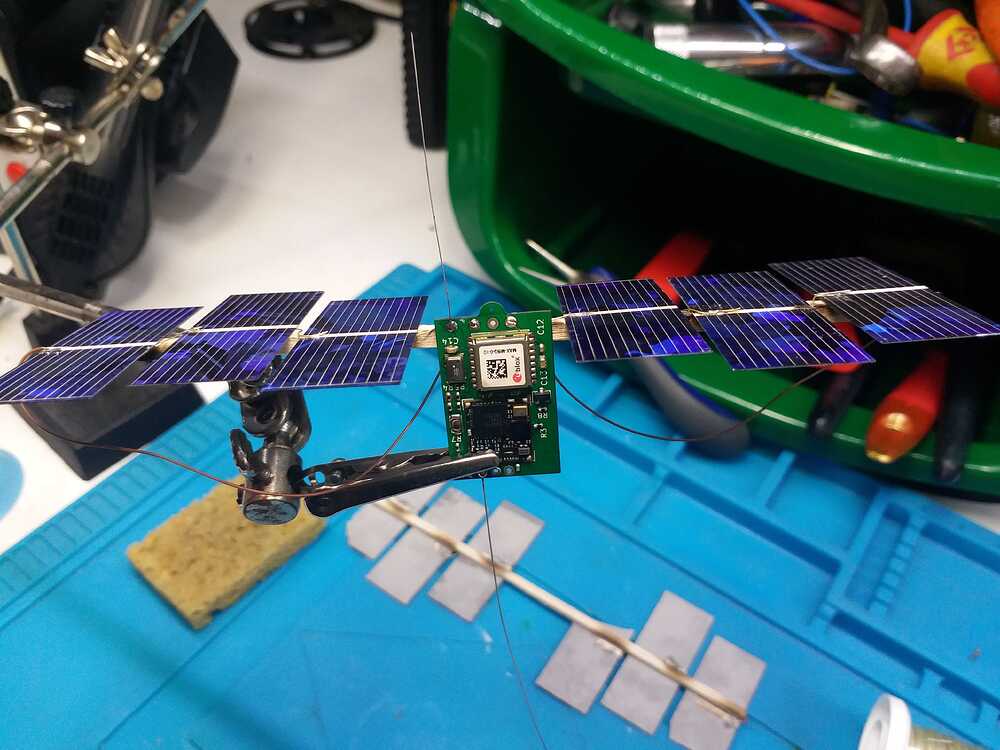

If you have the time, patience and some scrupulous documentation, combining a range of schemes can produce some remarkable results.