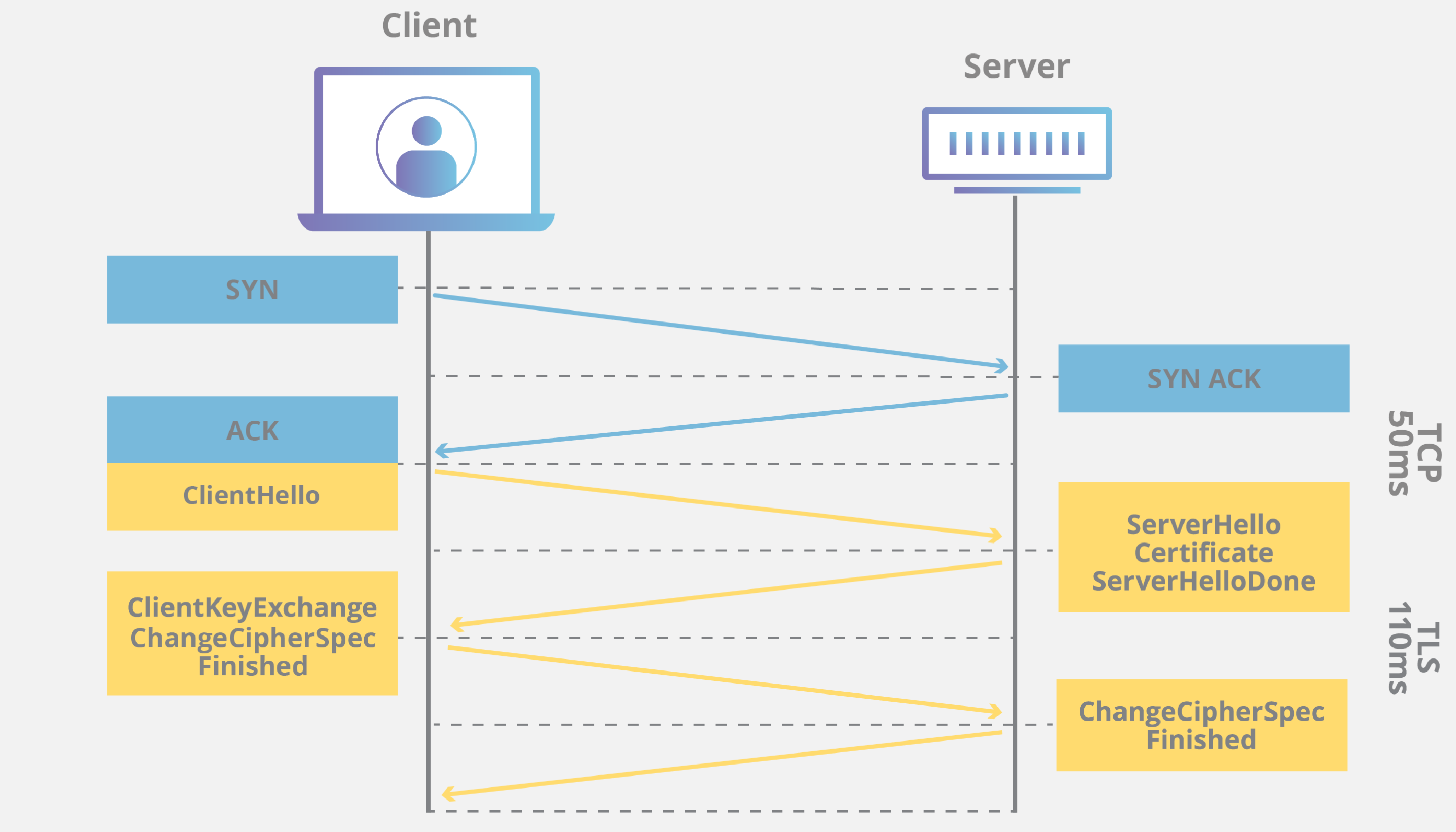

An MQTT connection is persistent: it is opened once and then kept open. For an HTTPS request on a new connection, the client must first complete the TCP three-way handshake and then the TLS handshake before the request itself can be sent.

Because MQTT performs the TCP and TLS handshakes only once, when the connection is established, the connection is ready to carry data as soon as new data arrives from the device.
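The difference can be made concrete by counting network round trips (RTTs). The sketch below is a simplified model with several assumptions. Each HTTPS request opens a fresh connection, with no HTTP keep-alive and no TLS session resumption. TCP needs 1 RTT before data can be sent. A full TLS 1.3 handshake adds 1 RTT and a full TLS 1.2 handshake adds 2. A request/response exchange (an HTTP request or an MQTT QoS 1 PUBLISH/PUBACK) costs 1 RTT. The MQTT CONNECT/CONNACK exchange is counted as one extra round trip at setup. There is no packet loss, and server processing time is ignored. The function names and the 50 ms RTT are illustrative, not taken from any library.

```python
# Simplified round-trip cost model (assumptions: fresh connection per HTTPS
# request, no keep-alive, no TLS resumption/0-RTT, no packet loss).

TCP_HANDSHAKE_RTTS = 1     # SYN / SYN-ACK; client data can follow the final ACK
TLS12_HANDSHAKE_RTTS = 2   # full TLS 1.2 handshake (no False Start)
TLS13_HANDSHAKE_RTTS = 1   # full TLS 1.3 handshake
REQUEST_RESPONSE_RTTS = 1  # HTTP request/response or MQTT QoS 1 PUBLISH/PUBACK
MQTT_CONNECT_RTTS = 1      # CONNECT/CONNACK, counted as a full round trip


def https_new_connection_rtts(messages: int, tls_rtts: int = TLS13_HANDSHAKE_RTTS) -> int:
    """Every message pays for TCP + TLS handshakes plus the request itself."""
    per_message = TCP_HANDSHAKE_RTTS + tls_rtts + REQUEST_RESPONSE_RTTS
    return messages * per_message


def mqtt_persistent_rtts(messages: int, tls_rtts: int = TLS13_HANDSHAKE_RTTS) -> int:
    """Handshakes happen once at connect time; each publish then costs one RTT."""
    setup = TCP_HANDSHAKE_RTTS + tls_rtts + MQTT_CONNECT_RTTS
    return setup + messages * REQUEST_RESPONSE_RTTS


if __name__ == "__main__":
    rtt_ms = 50  # hypothetical round-trip time between device and server
    for n in (1, 10, 100):
        https_ms = https_new_connection_rtts(n) * rtt_ms
        mqtt_ms = mqtt_persistent_rtts(n) * rtt_ms
        print(f"{n:>4} messages: HTTPS {https_ms} ms, MQTT {mqtt_ms} ms")
```

Under these assumptions, a single message is slightly cheaper over HTTPS because MQTT also pays for CONNECT/CONNACK. The persistent connection wins as soon as more than one message is sent, because after setup each MQTT publish costs one round trip instead of three (TLS 1.3) or four (TLS 1.2).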

Cloudflare has a good description of the TLS handshake:

https://www.cloudflare.com/learning/ssl/what-happens-in-a-tls-handshake/