@Jeff-UK so today I discovered packet loss in another situation…

Device and App registered to EU1v3, one gateway in reach, also registered to EU1v3.

Gateway is a Mikrotik UDP gateway again (yes I know, UDP…) with LTE (yes I know, LTE, baaaad!).

I see the uplink from the node in the TTN gateway ‘Live data’ tab, but not in the TTN device ‘Live data’ tab.

Also, the packet is missing on all three of my webhook endpoints (which are in different data centers).

So to me it clearly looks like TTN is losing messages within its own system, which should not happen, at least not on a regular basis… No Packet Broker is involved, and yes, the packet did arrive on the TTN side.

Metadata of the correctly processed uplink:

    {
      "name": "gs.up.receive",
      "time": "2021-08-31T20:03:49.745908419Z",
      "identifiers": [
        {
          "gateway_ids": {
            "gateway_id": "hir-ttn01v3"
          }
        },
        {
          "gateway_ids": {
            "gateway_id": "hir-ttn01v3",
            "eui": "4836372047001D00"
          }
        }
      ],
      "data": {
        "@type": "type.googleapis.com/ttn.lorawan.v3.UplinkMessage",
        "raw_payload": "QEIKCyYA3CcBE01ET2RMCTrWtOHygexSMP0=",
        "payload": {
          "m_hdr": {
            "m_type": "UNCONFIRMED_UP"
          },
          "mic": "7FIw/Q==",
          "mac_payload": {
            "f_hdr": {
              "dev_addr": "260B0A42",
              "f_ctrl": {},
              "f_cnt": 10204
            },
            "f_port": 1,
            "frm_payload": "E01ET2RMCTrWtOHygQ=="
          }
        },
        "settings": {
          "data_rate": {
            "lora": {
              "bandwidth": 125000,
              "spreading_factor": 7
            }
          },
          "coding_rate": "4/5",
          "frequency": "868300000",
          "timestamp": 2394773225,
          "time": "2021-08-31T20:03:50.991572Z"
        },
        "rx_metadata": [
          {
            "gateway_ids": {
              "gateway_id": "hir-ttn01v3",
              "eui": "4836372047001D00"
            },
            "time": "2021-08-31T20:03:50.991572Z",
            "timestamp": 2394773225,
            "rssi": -73,
            "channel_rssi": -73,
            "snr": 9.25,
            "uplink_token": "ChkKFwoLaGlyLXR0bjAxdjMSCEg2NyBHAB0AEOmt9fUIGgwIpZa6iQYQ8dDP4wIgqPztnNnrHQ==",
            "channel_index": 1
          }
        ],
        "received_at": "2021-08-31T20:03:49.745793649Z",
        "correlation_ids": [
          "gs:conn:01FEB3H9WXPK7GD0AZ7K6AZVRK",
          "gs:uplink:01FEEWZ2VHYCPYDT6SE3J9PJYT"
        ]
      },
      "correlation_ids": [
        "gs:conn:01FEB3H9WXPK7GD0AZ7K6AZVRK",
        "gs:uplink:01FEEWZ2VHYCPYDT6SE3J9PJYT"
      ],
      "origin": "ip-10-100-5-46.eu-west-1.compute.internal",
      "context": {
        "tenant-id": "CgN0dG4="
      },
      "visibility": {
        "rights": [
          "RIGHT_GATEWAY_TRAFFIC_READ",
          "RIGHT_GATEWAY_TRAFFIC_READ"
        ]
      },
      "unique_id": "01FEEWZ2VHFM930T1MTXMT9QFX"
    }

Metadata of the missing uplink:

    {
      "name": "gs.up.receive",
      "time": "2021-08-31T20:08:49.148113249Z",
      "identifiers": [
        {
          "gateway_ids": {
            "gateway_id": "hir-ttn01v3"
          }
        },
        {
          "gateway_ids": {
            "gateway_id": "hir-ttn01v3",
            "eui": "4836372047001D00"
          }
        }
      ],
      "data": {
        "@type": "type.googleapis.com/ttn.lorawan.v3.UplinkMessage",
        "raw_payload": "QEIKCyYA3ScBkpJWAk1cyoAv+Gti376IhpE=",
        "payload": {
          "m_hdr": {
            "m_type": "UNCONFIRMED_UP"
          },
          "mic": "voiGkQ==",
          "mac_payload": {
            "f_hdr": {
              "dev_addr": "260B0A42",
              "f_ctrl": {},
              "f_cnt": 10205
            },
            "f_port": 1,
            "frm_payload": "kpJWAk1cyoAv+Gti3w=="
          }
        },
        "settings": {
          "data_rate": {
            "lora": {
              "bandwidth": 125000,
              "spreading_factor": 7
            }
          },
          "coding_rate": "4/5",
          "frequency": "868500000",
          "timestamp": 2694196729,
          "time": "2021-08-31T20:08:50.415727Z"
        },
        "rx_metadata": [
          {
            "gateway_ids": {
              "gateway_id": "hir-ttn01v3",
              "eui": "4836372047001D00"
            },
            "time": "2021-08-31T20:08:50.415727Z",
            "timestamp": 2694196729,
            "rssi": -78,
            "channel_rssi": -78,
            "snr": 8.25,
            "uplink_token": "ChkKFwoLaGlyLXR0bjAxdjMSCEg2NyBHAB0AEPnb2IQKGgsI0Zi6iQYQrZnIRiCoqY7VtPQd",
            "channel_index": 2
          }
        ],
        "received_at": "2021-08-31T20:08:49.147983533Z",
        "correlation_ids": [
          "gs:conn:01FEB3H9WXPK7GD0AZ7K6AZVRK",
          "gs:uplink:01FEEX877WCQEYNSYM67DA29CP"
        ]
      },
      "correlation_ids": [
        "gs:conn:01FEB3H9WXPK7GD0AZ7K6AZVRK",
        "gs:uplink:01FEEX877WCQEYNSYM67DA29CP"
      ],
      "origin": "ip-10-100-5-46.eu-west-1.compute.internal",
      "context": {
        "tenant-id": "CgN0dG4="
      },
      "visibility": {
        "rights": [
          "RIGHT_GATEWAY_TRAFFIC_READ",
          "RIGHT_GATEWAY_TRAFFIC_READ"
        ]
      },
      "unique_id": "01FEEX877W5FJJ11Z7ED57Z1CS"
    }
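
As a sanity check that the two frames really are consecutive uplinks from the same device, here is a minimal Python sketch that decodes the `raw_payload` of both events by hand. The helper name `parse_uplink_header` is my own and not part of any TTN tooling, and it assumes a LoRaWAN 1.0.x data frame layout (MHDR, DevAddr, FCtrl, FCnt, optional FOpts, FPort, FRMPayload, 4-byte MIC) with an FPort present.

```python
import base64


def parse_uplink_header(raw_payload_b64: str) -> dict:
    """Split a base64 LoRaWAN data-frame PHYPayload into its header fields.

    Hypothetical helper for illustration; assumes FPort is present.
    """
    phy = base64.b64decode(raw_payload_b64)
    mhdr = phy[0]
    f_ctrl = phy[5]
    f_opts_len = f_ctrl & 0x0F          # low nibble of FCtrl = FOpts length
    port_index = 8 + f_opts_len         # FPort follows FHDR (7 bytes + FOpts)
    return {
        "m_type": mhdr >> 5,                            # 2 = unconfirmed data up
        "dev_addr": phy[1:5][::-1].hex().upper(),       # little-endian on the wire
        "f_cnt": int.from_bytes(phy[6:8], "little"),    # low 16 bits of the counter
        "f_port": phy[port_index],
        "frm_payload_len": len(phy) - port_index - 1 - 4,
        "mic": phy[-4:].hex().upper(),
    }


# raw_payload values copied from the two gs.up.receive events above
good = parse_uplink_header("QEIKCyYA3CcBE01ET2RMCTrWtOHygexSMP0=")
missing = parse_uplink_header("QEIKCyYA3ScBkpJWAk1cyoAv+Gti376IhpE=")

print(good)
print(missing)
```

Both frames should decode to DevAddr `260B0A42` on FPort 1, with FCnt 10204 for the processed uplink and 10205 for the missing one, which matches the `f_hdr` fields the Gateway Server reports in the events.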

And the screenshots:

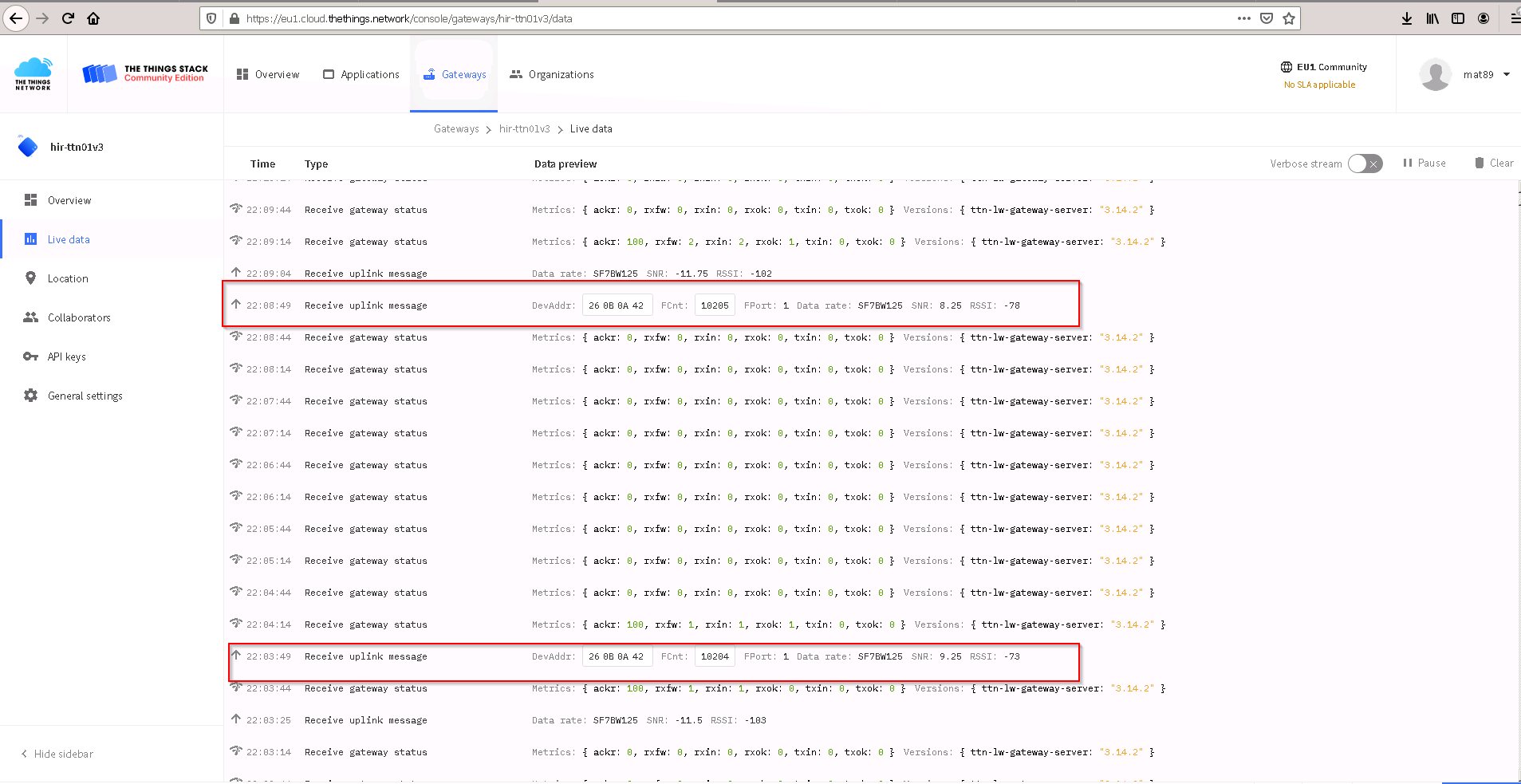

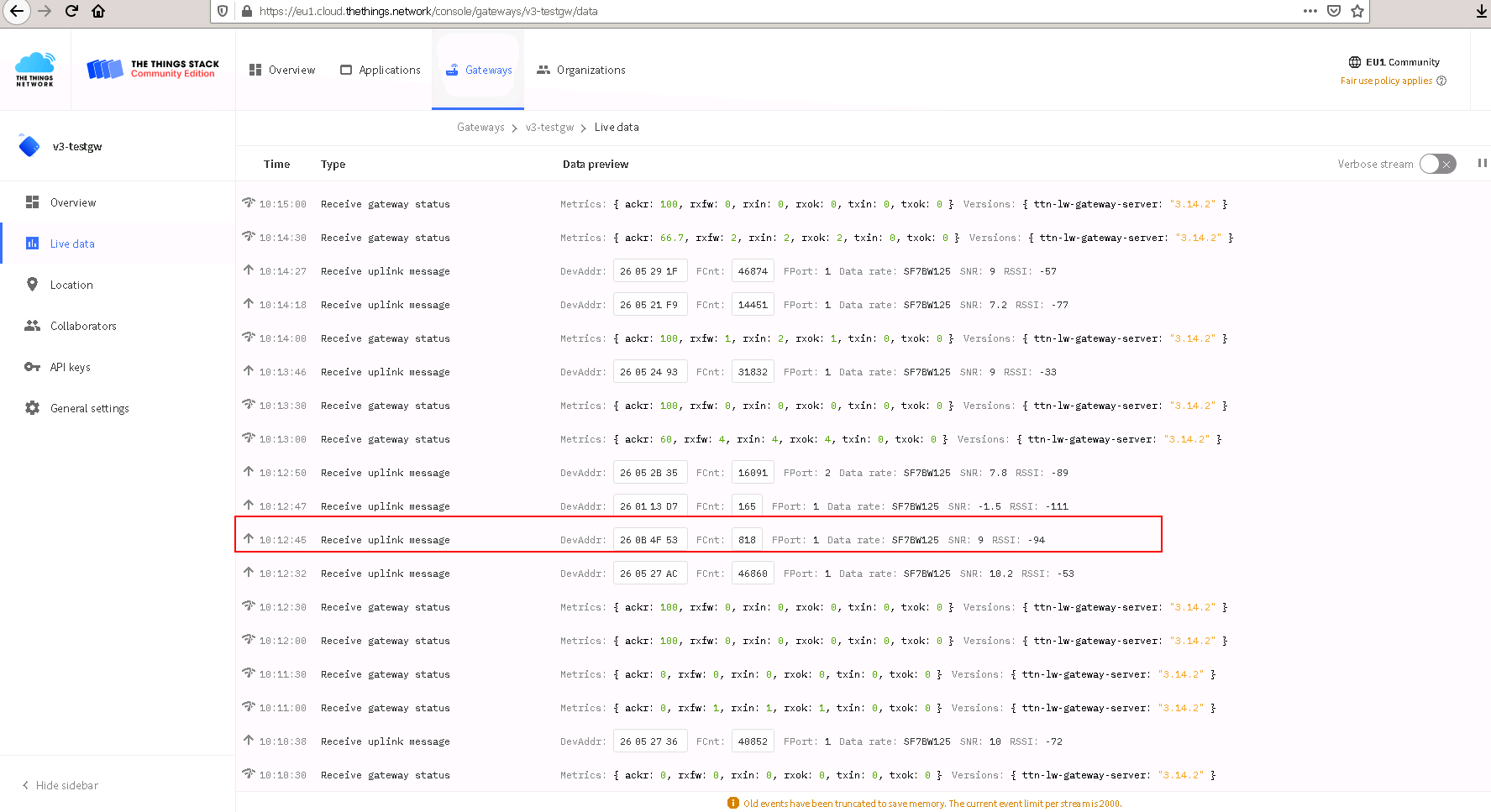

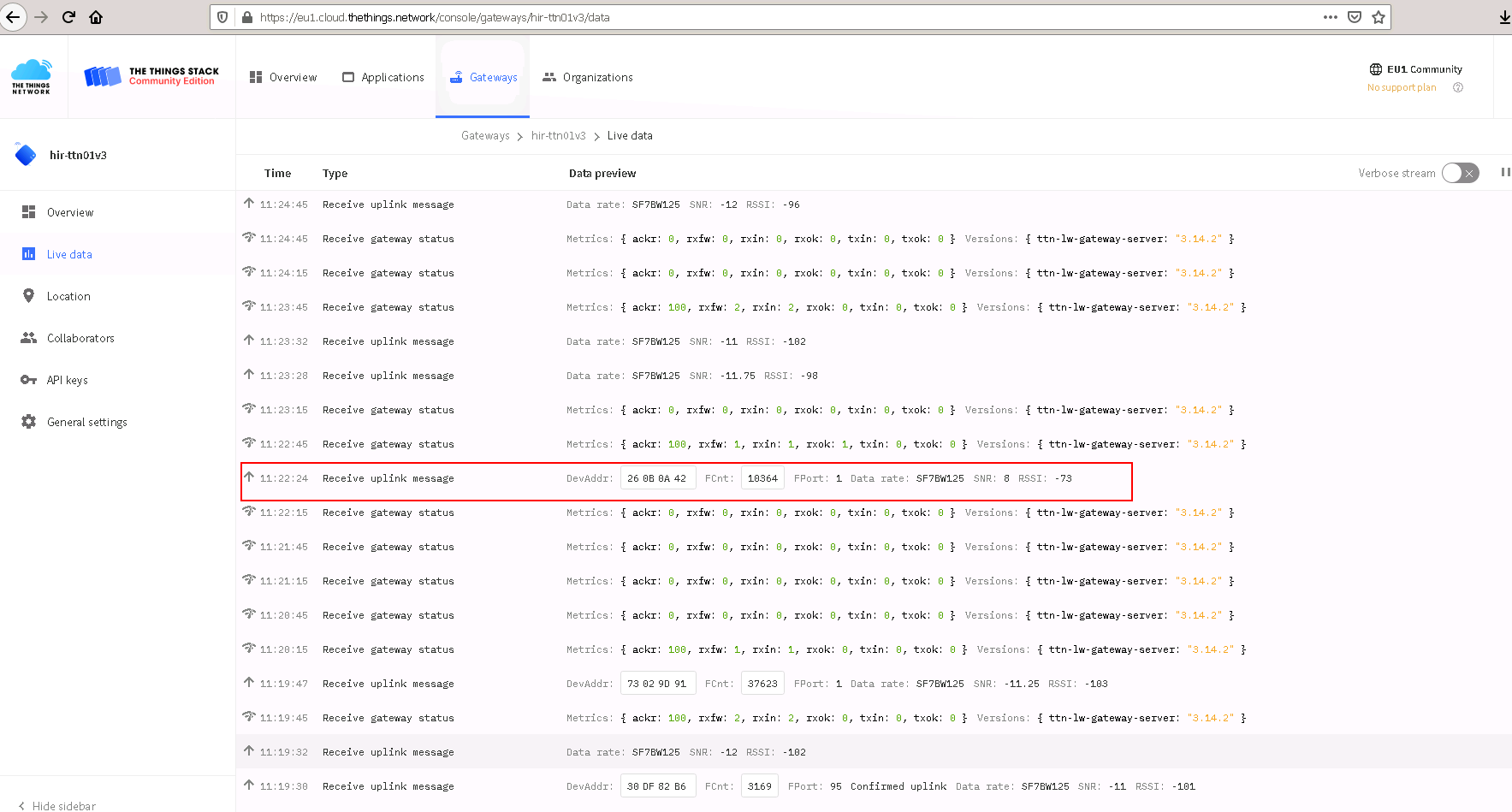

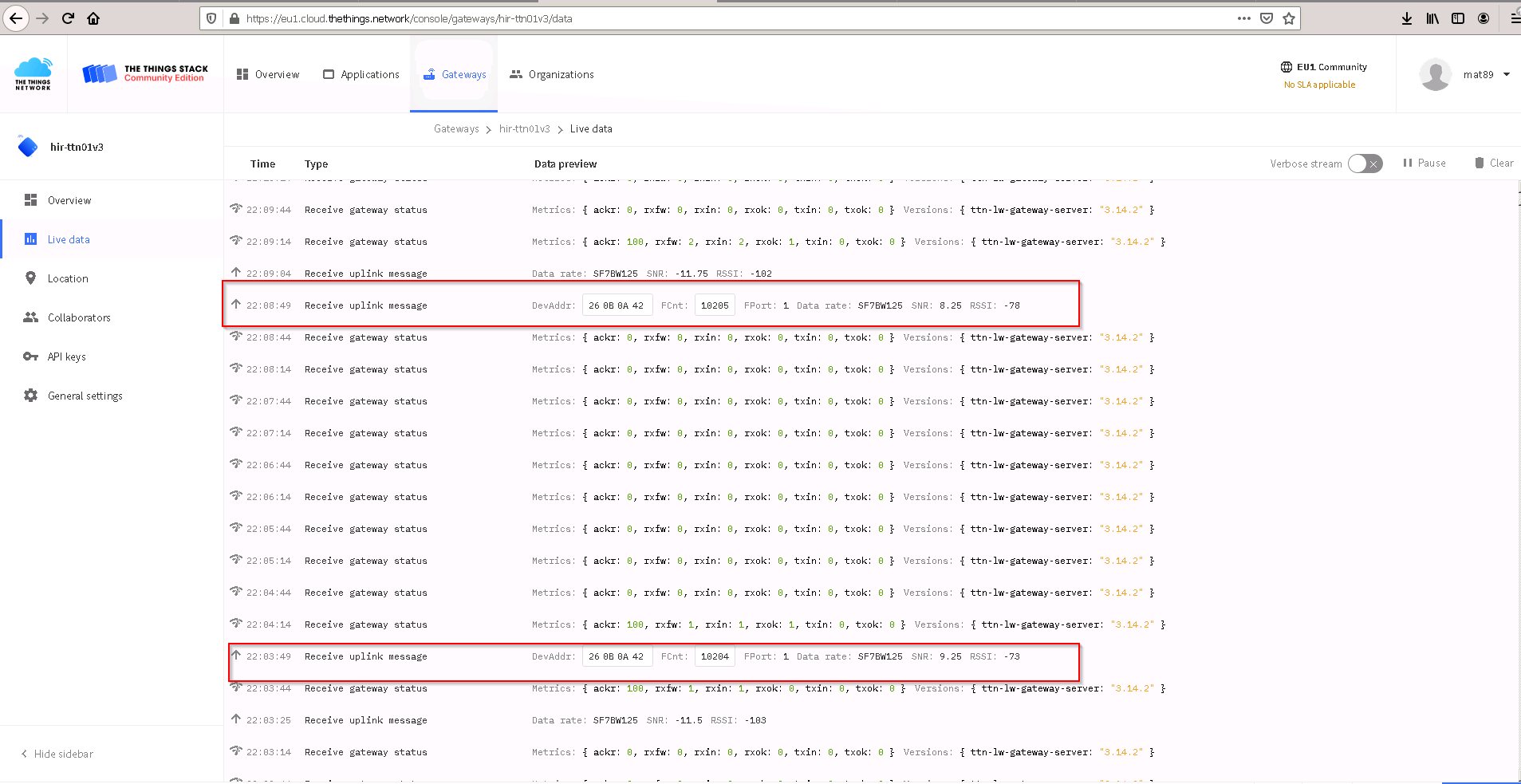

Gateway live data:

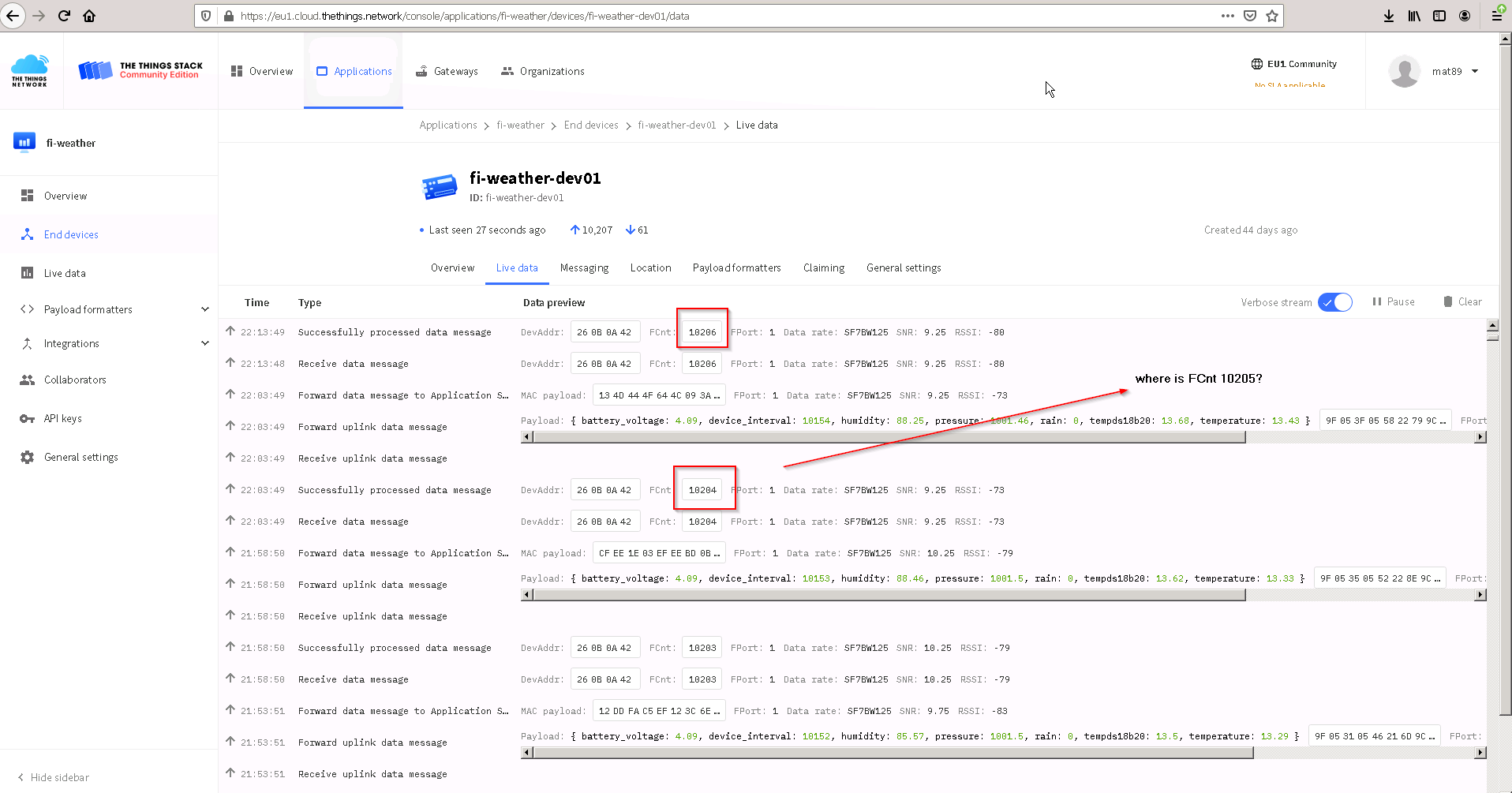

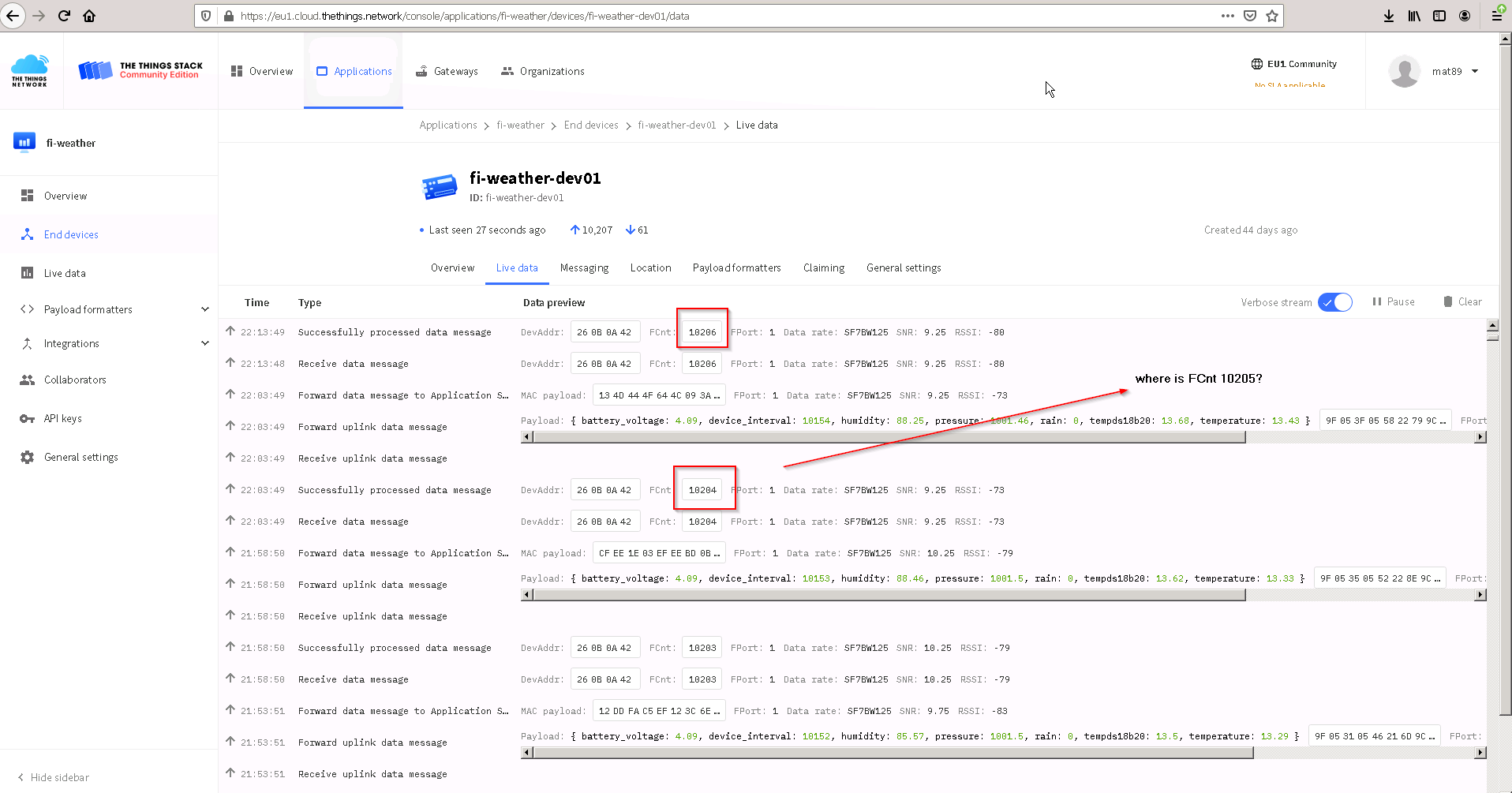

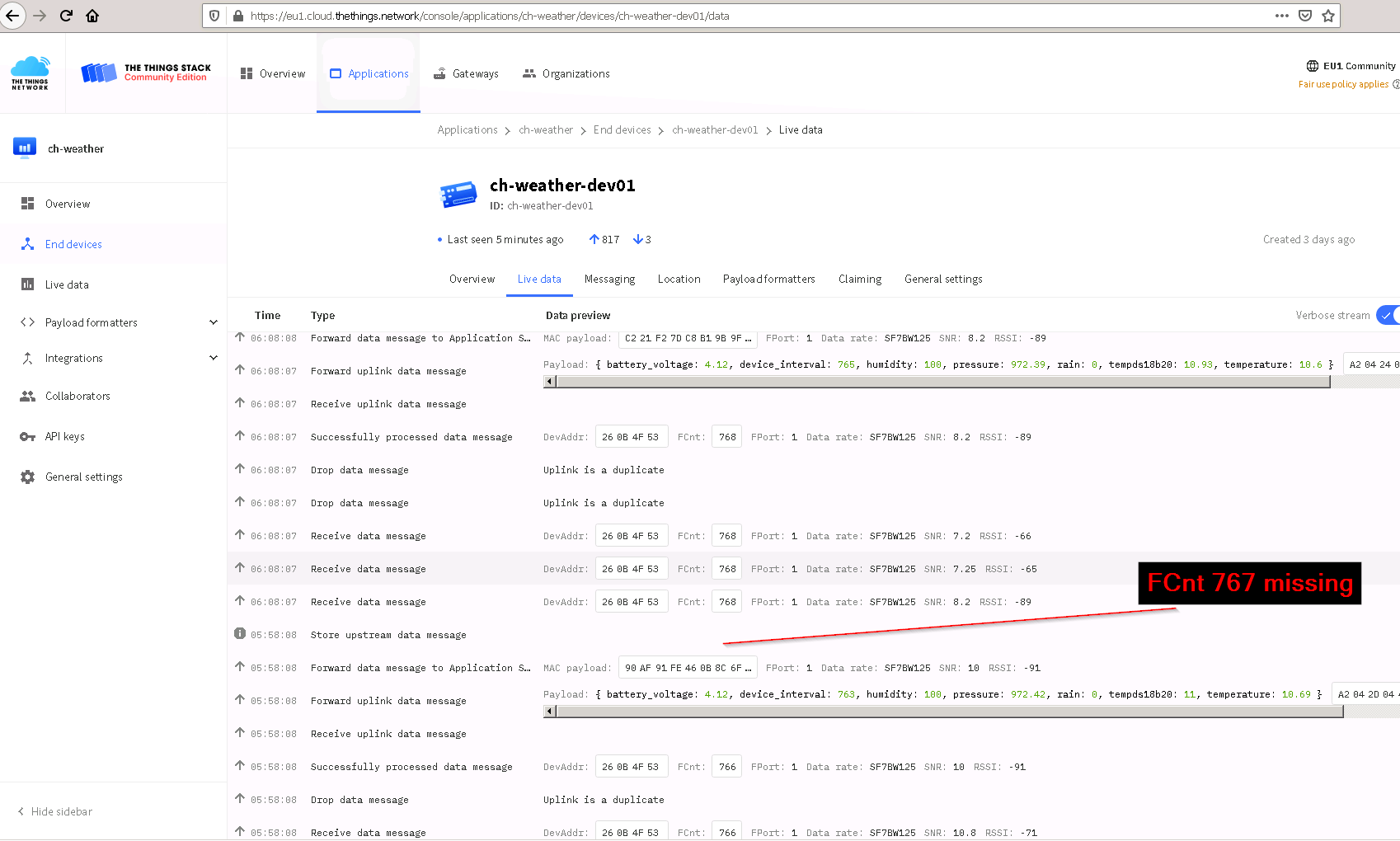

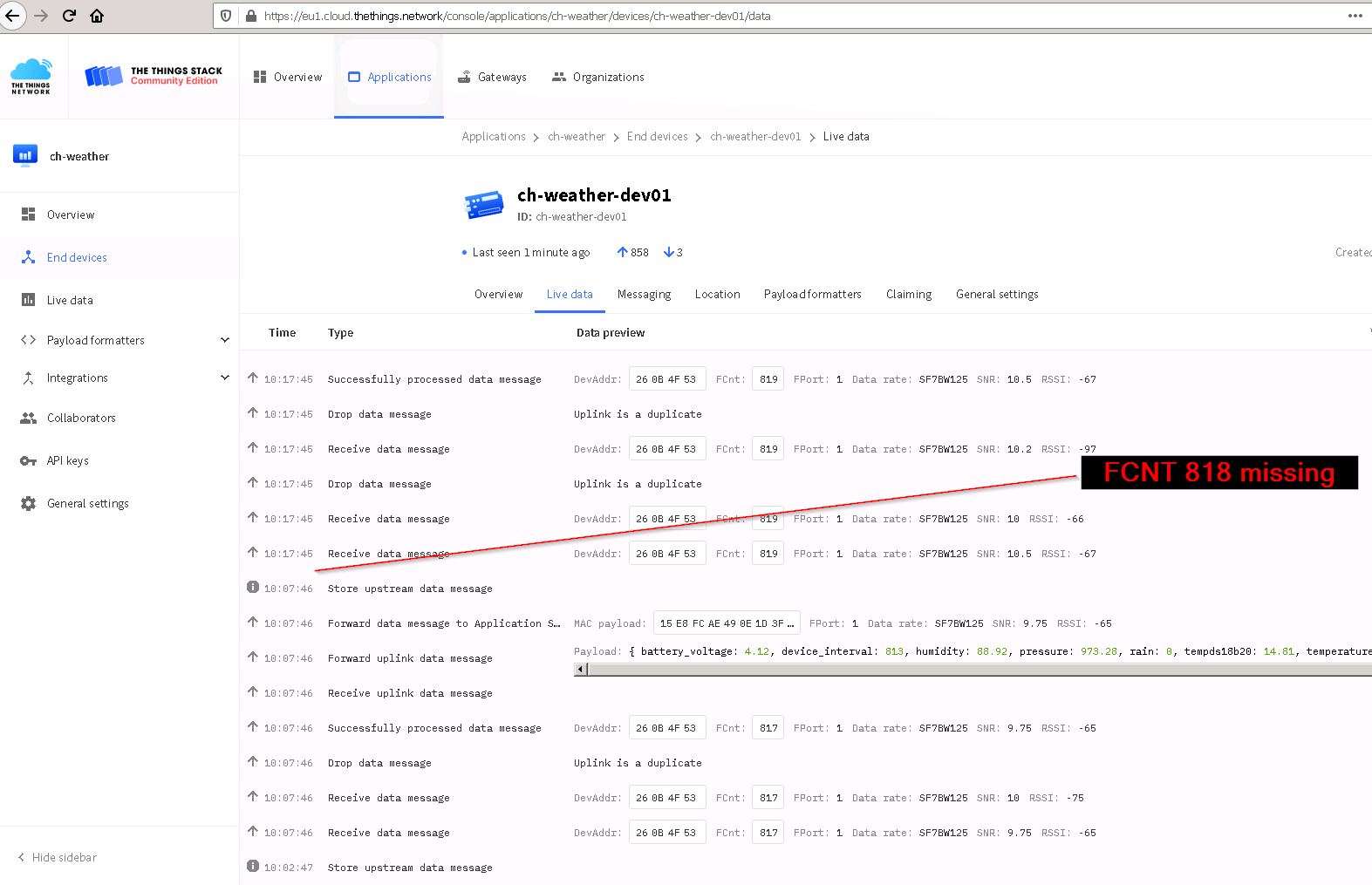

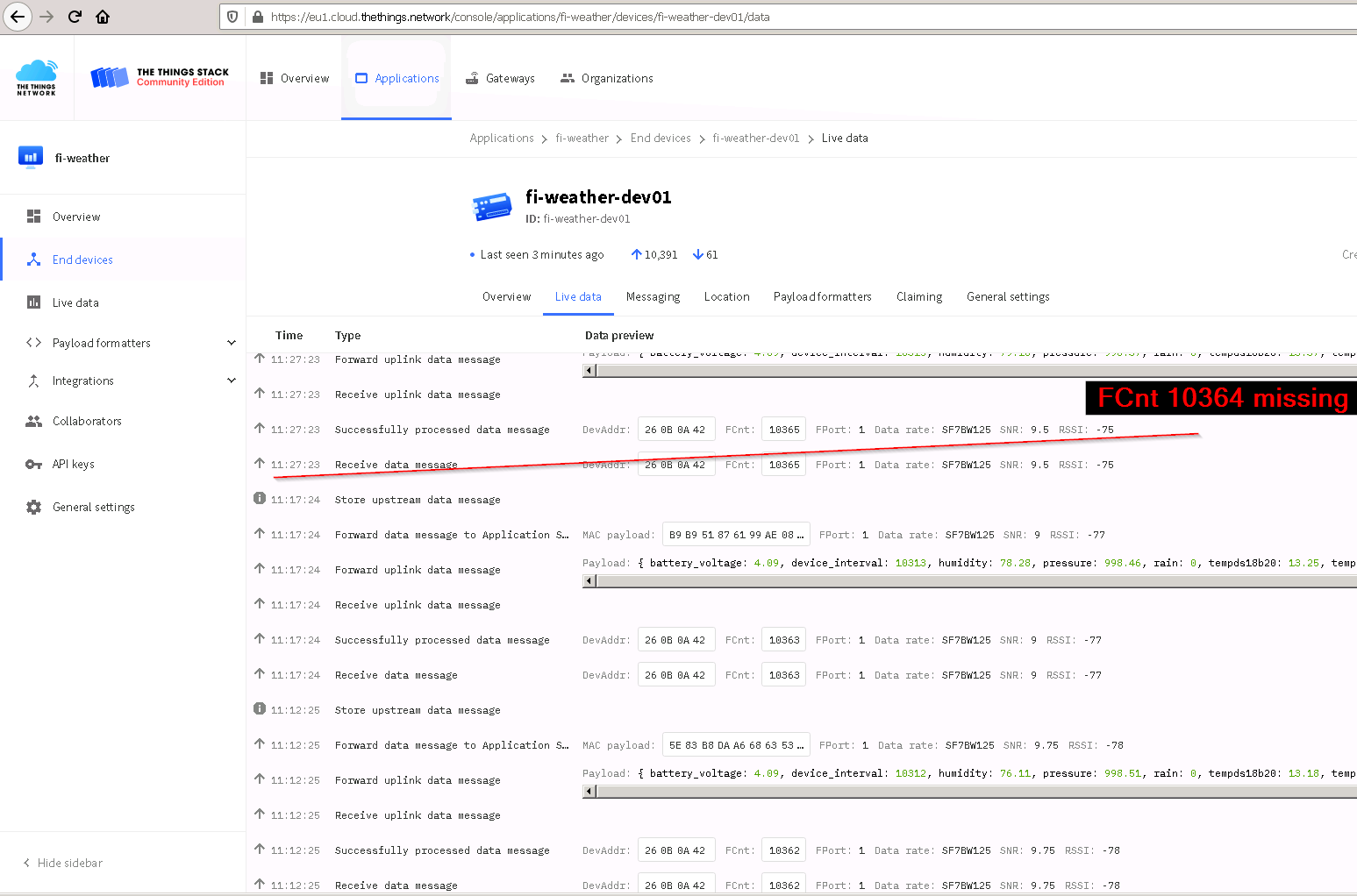

Device live data: